What if a single AI model could change how we write, code, and automate the world around us? Meet Deepseek, a next-generation language model that doesn’t just keep pace with innovation but aims to set new standards. Designed for natural language understanding, code generation, and automation, Deepseek promises to reshape industries and redefine how we interact with AI. Its efficient architecture and versatility make it stand out in a crowded field, with benchmark results that are competitive with today’s leading models.

In this article, we’ll explore what sets Deepseek apart: its architecture, key features, and real-world applications across diverse sectors. Whether it’s transforming software development, enhancing education, or streamlining business processes, Deepseek is not just another AI model; it offers a glimpse into the future of intelligent technology.

Ready to dive in and discover what’s next for AI? Let’s get started.

What is Deepseek?

Deepseek is an advanced AI language model, distinguished by its innovative architecture and broad functionality. Engineered to excel in natural language understanding and generation, it supports a diverse range of applications, including software development, creative writing, and business automation. Its ability to produce coherent, human-like text makes it a valuable tool for developers, educators, and enterprises seeking cutting-edge AI solutions.

The Evolution of Deepseek: Version 3

The release of Deepseek v3 marks a significant milestone in its development, introducing key enhancements aimed at improving both performance and usability. One of the defining features of this iteration is that its model weights are openly released, which allows developers and researchers to freely study, run, and adapt the model for specific use cases. This openness fosters innovation and encourages collaboration within the AI community.

Deepseek v3 has been trained on a large, diverse dataset, enabling strong contextual understanding and more accurate response generation. Notably, it supports a context window of up to 128K tokens, making it well suited to complex tasks such as multi-document analysis and advanced reasoning. Beyond general text processing, Deepseek v3 offers improved performance in specialized areas, including code generation and creative writing, and the Deepseek chat interface adds web search on top of the model.

Comparative Advantage over Other Language Models

In an increasingly competitive landscape dominated by models such as GPT-4 and BERT, Deepseek v3 differentiates itself through a combination of architectural innovations and performance optimizations. Central to its design is the Mixture-of-Experts (MoE) architecture, in which a learned router sends each token to a small subset of specialized sub-networks called experts. Because only the most relevant parts of the model are engaged for each token, this approach improves efficiency, scalability, and task-specific performance.

Another key innovation is its auxiliary-loss-free balancing strategy. This strategy keeps work spread evenly across the experts without the accuracy penalty that conventional auxiliary balancing losses can introduce. Additionally, Deepseek v3 uses mixed precision training with FP8, a technique that improves computational efficiency with minimal loss of accuracy. These features position it as a highly competitive option for developers and organizations seeking robust, scalable AI solutions.

We’ve only begun to explore what Deepseek offers. In the following sections, we’ll take a deeper dive into its architecture, key features, and real-world applications, uncovering what truly sets this model apart.

The Unique Architecture of Deepseek

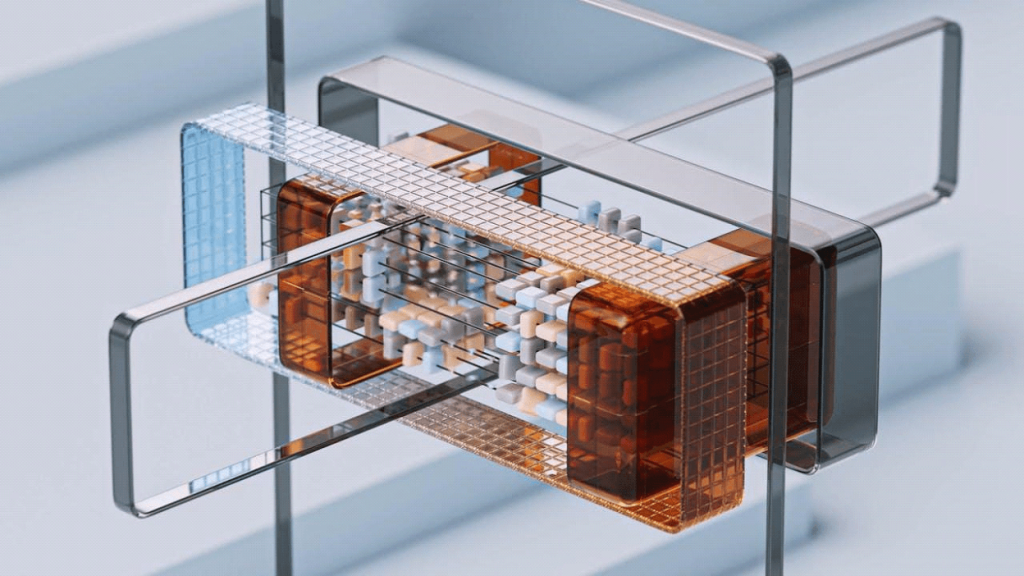

Deepseek’s architecture combines established design principles with targeted optimizations for efficiency, enabling strong performance across a wide range of language processing tasks.

At its core, Deepseek builds upon the well-established transformer framework, enhanced by two key innovations: a Mixture-of-Experts (MoE) architecture and a Multi-Head Latent Attention (MLA) mechanism.

Transformer Foundation Overview

The transformer architecture has become the gold standard for modern language models due to its ability to efficiently process sequential data and capture complex contextual relationships. Deepseek employs a standard transformer block, which combines multi-head self-attention with feedforward neural networks, allowing the model to dynamically assess the relative importance of different tokens in a sequence. This dynamic weighting enhances the model’s contextual understanding, improving the quality of both text generation and comprehension tasks.
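To make the idea of "dynamic weighting" concrete, here is a minimal single-head sketch of scaled dot-product self-attention in NumPy. It is a generic textbook version, not Deepseek's implementation. The projection matrices are random stand-ins for learned weights, and the causal mask reflects the usual setup for text-generating models.

```python
import numpy as np

def self_attention(x, w_q, w_k, w_v, causal=True):
    """Single-head scaled dot-product self-attention over x of shape (seq, d_model)."""
    q, k, v = x @ w_q, x @ w_k, x @ w_v
    # Similarity of every query with every key, scaled by sqrt(d_k).
    scores = q @ k.T / np.sqrt(k.shape[-1])
    if causal:
        # Each position may only attend to itself and earlier positions.
        mask = np.triu(np.ones(scores.shape, dtype=bool), k=1)
        scores = np.where(mask, -np.inf, scores)
    # Row-wise softmax turns scores into attention weights that sum to 1.
    scores = scores - scores.max(axis=-1, keepdims=True)
    weights = np.exp(scores)
    weights = weights / weights.sum(axis=-1, keepdims=True)
    # Each output row is a weighted mix of the value vectors.
    return weights @ v, weights

rng = np.random.default_rng(0)
seq_len, d_model, d_head = 5, 16, 8
x = rng.standard_normal((seq_len, d_model))
w_q, w_k, w_v = (rng.standard_normal((d_model, d_head)) for _ in range(3))
out, attn = self_attention(x, w_q, w_k, w_v)
print(out.shape)  # (5, 8)
```

Multi-head attention runs several such heads in parallel, each with its own projections, and concatenates their outputs.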

Mixture-of-Experts (MoE) Architecture

While Deepseek adopts the conventional transformer structure as its foundation, it departs from traditional dense transformer designs by integrating the Mixture-of-Experts (MoE) strategy. This lets it maintain a massive model size of 671 billion total parameters while activating only 37 billion parameters per token during inference. This selective activation optimizes resource usage and allocates computational power where it is most needed, enabling high performance without unnecessary overhead.
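The two figures above make the degree of sparsity easy to quantify. The sketch below computes the share of parameters that are active for each token. It also compares per-token compute against a hypothetical dense model of the same total size, using the common rule of thumb of roughly two floating-point operations per active parameter per token in a forward pass. That rule is an approximation, not a measured figure.

```python
TOTAL_PARAMS = 671e9   # total parameters reported for Deepseek v3
ACTIVE_PARAMS = 37e9   # parameters activated per token
FLOPS_PER_PARAM = 2    # rule of thumb: one multiply + one add per weight

active_share = ACTIVE_PARAMS / TOTAL_PARAMS
moe_flops = FLOPS_PER_PARAM * ACTIVE_PARAMS     # per token, MoE model
dense_flops = FLOPS_PER_PARAM * TOTAL_PARAMS    # per token, hypothetical dense model

print(f"Active share: {active_share:.1%}")  # 5.5%
print(f"Compute per token: {moe_flops / 1e9:.0f} vs {dense_flops / 1e9:.0f} GFLOPs")  # 74 vs 1342
```

By this rough estimate, each token costs about one eighteenth of what a dense model of the same size would require.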

The effectiveness of the MoE strategy lies in its dynamic routing mechanism, which determines which specialized experts process each input token. A learned gating function scores every token against the experts, and the token is sent only to the highest-scoring subset. Because only the most relevant parts of the model are engaged for each token, Deepseek can handle a wide variety of applications while keeping inference latency low and throughput high.

This approach offers several key advantages:

- Performance optimization: Experts specialize during training, so each token is handled by the parts of the network best suited to it. This helps Deepseek achieve high accuracy on complex tasks.

- Resource efficiency: Activating only a fraction of the total parameters reduces computational costs and memory usage.

- Scalability: The model can accommodate increasingly diverse tasks by adding more experts without a proportional increase in computational complexity.
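A toy version of top-k routing shows how only the selected experts are ever evaluated. The gating here is loosely modeled on the approach described for Deepseek v3: sigmoid affinity scores, with the selected weights renormalized to sum to 1. The experts, however, are hypothetical scalar functions, and the real model adds shared experts along with many other details.

```python
import math
from typing import Callable, List, Tuple

def sigmoid(z: float) -> float:
    return 1.0 / (1.0 + math.exp(-z))

def route_token(logits: List[float], k: int) -> List[Tuple[int, float]]:
    """Select the top-k experts for one token; return (expert index, gate weight) pairs."""
    affinity = [sigmoid(z) for z in logits]
    top = sorted(range(len(affinity)), key=lambda i: affinity[i], reverse=True)[:k]
    total = sum(affinity[i] for i in top)
    # Renormalize so the selected gate weights sum to 1.
    return [(i, affinity[i] / total) for i in top]

def moe_forward(x: float, logits: List[float],
                experts: List[Callable[[float], float]], k: int) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    return sum(w * experts[i](x) for i, w in route_token(logits, k))

# Four hypothetical experts; each just scales its input by a different factor.
experts = [lambda x, s=s: s * x for s in (1.0, 2.0, 3.0, 4.0)]
logits = [0.1, 2.0, -1.0, 1.5]   # made-up router scores for one token
print(route_token(logits, k=2))  # experts 1 and 3 are selected
print(moe_forward(1.0, logits, experts, k=2))
```

Adding experts grows the model's capacity, but each token still passes through only `k` of them, so per-token compute stays roughly constant.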

Multi-Head Latent Attention (MLA)

Another critical component of Deepseek’s architecture is its Multi-Head Latent Attention (MLA) mechanism, which further enhances its efficiency, particularly during inference. Traditional attention mechanisms store the full keys and values for every previous token in a key-value (KV) cache, which can consume a great deal of memory. Deepseek addresses this by compressing keys and values into a compact latent representation and caching that representation instead. This preserves essential information while drastically reducing memory requirements.
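The core idea of compressing keys and values can be sketched with two matrix products. A down-projection produces a small latent vector for each token, and only that vector is cached. Up-projections then rebuild per-head keys and values from it when attention is computed. This NumPy sketch uses made-up dimensions and random matrices. It also leaves out parts of the real design, such as the separate positional (RoPE) key component and the query compression. In practice the up-projections can be folded into other weight matrices, so the full keys never need to be materialized; the sketch expands them explicitly for clarity.

```python
import numpy as np

rng = np.random.default_rng(0)
d_model, d_latent, n_heads, d_head = 64, 16, 4, 16   # illustrative sizes only

# Random stand-ins for learned projection matrices.
W_down = rng.standard_normal((d_model, d_latent)) / np.sqrt(d_model)
W_up_k = rng.standard_normal((d_latent, n_heads * d_head)) / np.sqrt(d_latent)
W_up_v = rng.standard_normal((d_latent, n_heads * d_head)) / np.sqrt(d_latent)

def compress(h: np.ndarray) -> np.ndarray:
    """Hidden states (seq, d_model) -> latent KV cache (seq, d_latent)."""
    return h @ W_down

def expand(c: np.ndarray):
    """Rebuild per-head keys and values (seq, n_heads, d_head) from the cached latent."""
    seq = c.shape[0]
    k = (c @ W_up_k).reshape(seq, n_heads, d_head)
    v = (c @ W_up_v).reshape(seq, n_heads, d_head)
    return k, v

h = rng.standard_normal((10, d_model))   # hidden states for 10 tokens
cache = compress(h)
k, v = expand(cache)
full_kv_size = k.size + v.size           # what a conventional KV cache would hold
print(cache.size, full_kv_size)          # 160 1280
```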

The MLA mechanism allows Deepseek to maintain high performance with lower memory usage, enabling it to process longer context windows more effectively. This capability is especially valuable for tasks that involve extensive document analysis or long-form content generation, where maintaining context across a large input is crucial.

By integrating MLA, Deepseek achieves:

- Reduced memory footprint: The compressed latent space minimizes storage requirements, enhancing efficiency.

- Faster inference: A smaller KV cache means less data must be read from memory at each generation step. This speeds up inference, especially for long inputs.

- Enhanced scalability: The ability to handle larger context windows makes Deepseek suitable for more demanding applications.

Deepseek’s architectural innovations combine the transformer foundation with a Mixture-of-Experts framework and Multi-Head Latent Attention. Together, they make it a top-tier language model that is competitive with its peers and outperforms many of them. This design boosts performance across diverse tasks while ensuring flexibility, scalability, and resource efficiency, making Deepseek a strong choice for modern natural language processing applications.

Five Key Features of Deepseek

Deepseek offers a comprehensive suite of advanced features that enhance its efficiency, scalability, and versatility across a wide array of language processing tasks. This section outlines the standout innovations that differentiate Deepseek from other language models.

- Auxiliary-Loss-Free Balancing

A hallmark of Deepseek’s architecture is its auxiliary-loss-free balancing strategy, which distributes load across its experts without relying on a conventional auxiliary loss. In traditional Mixture-of-Experts (MoE) systems, auxiliary losses are commonly used to balance expert utilization. However, they introduce extra gradients that can degrade overall model performance. Deepseek instead uses a dynamic bias mechanism that adjusts routing decisions throughout training, keeping expert engagement balanced.

This mechanism works by adding an expert-specific bias to each routing score before the top-K routing decision is made. The bias influences only which experts are selected, not how their outputs are weighted. After each training step, underused experts have their bias increased, while heavily loaded experts have theirs decreased. This adjustment keeps the load balanced without sacrificing model accuracy or efficiency, which prevents bottlenecks and improves the overall effectiveness of the MoE framework.
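The balancing loop can be simulated in a few lines. In this sketch, the bias is added to each expert's score only when choosing the top-k experts. After every batch, each overloaded expert's bias is lowered by a fixed step `gamma`, and each underloaded expert's bias is raised by the same amount. The expert preferences, noise level, batch size, and step size are all hypothetical values chosen to make the effect visible. Gate weights, which would still use the unbiased scores, are omitted.

```python
import random
from typing import List, Tuple

def select_experts(scores: List[float], bias: List[float], k: int) -> List[int]:
    """Top-k choice on bias-adjusted scores (the bias only affects which experts win)."""
    adjusted = [s + b for s, b in zip(scores, bias)]
    return sorted(range(len(scores)), key=lambda i: adjusted[i], reverse=True)[:k]

def update_bias(bias: List[float], load: List[int], gamma: float) -> List[float]:
    """Lower the bias of overloaded experts and raise it for underloaded ones."""
    mean_load = sum(load) / len(load)
    new_bias = []
    for b, l in zip(bias, load):
        if l > mean_load:
            new_bias.append(b - gamma)
        elif l < mean_load:
            new_bias.append(b + gamma)
        else:
            new_bias.append(b)
    return new_bias

def simulate(steps=300, tokens=64, k=1, gamma=0.01, seed=0) -> Tuple[List[List[int]], List[float]]:
    rng = random.Random(seed)
    pref = [0.5, 0.2, 0.1, 0.0]   # hypothetical skew: expert 0 is "popular"
    bias = [0.0] * len(pref)
    history = []
    for _ in range(steps):
        load = [0] * len(pref)
        for _ in range(tokens):
            scores = [p + rng.gauss(0.0, 0.1) for p in pref]   # noisy per-token scores
            for e in select_experts(scores, bias, k):
                load[e] += 1
        history.append(load)
        bias = update_bias(bias, load, gamma)   # no loss term involved
    return history, bias

history, bias = simulate()
tail = history[-50:]
avg_load = [sum(h[e] for h in tail) / len(tail) for e in range(4)]
print("first batch:", history[0])
print("avg over last 50 batches:", [round(a, 1) for a in avg_load])
```

The router starts by sending nearly every token to expert 0. As the biases adjust, the loads drift toward an even split, without any extra term in the training loss.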

- FP8 Mixed Precision Framework

Deepseek’s FP8 mixed precision training framework is a major step forward in computational efficiency. It runs most compute-intensive matrix multiplications in an 8-bit floating point (FP8) format while keeping numerically sensitive operations in higher precision. This lets Deepseek achieve faster computation and lower memory usage while maintaining high accuracy, so the model can be trained on large datasets with significantly reduced resource requirements.

The use of mixed precision is particularly advantageous when scaling large models like Deepseek. High-precision arithmetic, while accurate, can be computationally expensive. By balancing precision and efficiency with FP8, Deepseek not only accelerates training and inference but also lowers hardware costs, making it an appealing choice for developers and researchers working on large-scale AI applications.
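FP8 offers very little precision and range, which is why mixed precision frameworks pair it with scaling factors. The sketch below, in plain Python, first scales a block of values so its largest magnitude lands at the E4M3 maximum. It then simulates rounding to the E4M3 variant of FP8, which has 4 exponent bits, 3 mantissa bits, and a largest finite value of 448. This illustrates the general block-scaling idea only. It does not model the block sizes, rounding details, or choice of which operations run in FP8 in Deepseek's actual framework.

```python
import math
from typing import List, Tuple

E4M3_MAX = 448.0               # largest finite E4M3 value
E4M3_MIN_NORMAL = 2.0 ** -6    # smallest normal E4M3 value
E4M3_SUBNORMAL_STEP = 2.0 ** -9

def round_to_e4m3(x: float) -> float:
    """Round x to the nearest E4M3-representable value, saturating at +/-448."""
    if x == 0.0:
        return 0.0
    sign = -1.0 if x < 0 else 1.0
    a = min(abs(x), E4M3_MAX)
    if a < E4M3_MIN_NORMAL:
        step = E4M3_SUBNORMAL_STEP       # evenly spaced subnormals
    else:
        _, exp = math.frexp(a)           # a = m * 2**exp with 0.5 <= m < 1
        step = 2.0 ** (exp - 1 - 3)      # 3 mantissa bits: 8 steps per power of two
    return sign * min(round(a / step) * step, E4M3_MAX)

def quantize_block(values: List[float]) -> Tuple[List[float], float]:
    """Scale a block so its largest magnitude maps to E4M3_MAX, then round to E4M3."""
    amax = max(abs(v) for v in values)
    scale = E4M3_MAX / amax if amax > 0 else 1.0
    return [round_to_e4m3(v * scale) for v in values], scale

def dequantize_block(q: List[float], scale: float) -> List[float]:
    """Undo the scaling to recover approximate original values."""
    return [v / scale for v in q]

block = [0.001, -0.02, 0.3, 1.7]
q, scale = quantize_block(block)
restored = dequantize_block(q, scale)
for orig, back in zip(block, restored):
    print(f"{orig:+.4f} -> {back:+.4f}")
```

Because the scale is chosen per block, small and large blocks both make use of FP8's limited range. Within a block, each value keeps a relative error of at most about 6% (half of one mantissa step).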

- Multi-Token Prediction

Deepseek is trained with a multi-token prediction (MTP) objective: at each position, the model learns to predict not only the next token but also additional tokens further ahead. This denser training signal improves performance. At inference time, the MTP module can also be reused for speculative decoding, a technique in which cheaply drafted tokens are checked by the main model in a single pass, so that more than one token can be accepted per step.

Speculative decoding is particularly beneficial in real-time applications such as chatbots, virtual assistants, and other interactive AI systems, where low latency is critical. Because the main model verifies every drafted token, response times drop without any loss of output quality, which makes Deepseek well suited to real-time AI-driven interactions.
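Speculative decoding itself is simple to sketch. Below, a cheap draft model proposes `k` tokens and the target model checks them. The longest agreeing prefix is kept, and the target's own token replaces the first mismatch, so the final output is identical to plain greedy decoding with the target model. Both models are hypothetical toy functions over integer tokens. In Deepseek's case, the MTP module would play the draft role. A real system also verifies all drafted positions in one batched forward pass rather than the per-token loop used here for readability.

```python
from typing import Callable, List, Tuple

NextToken = Callable[[List[int]], int]   # greedy next-token function

def greedy_decode(model: NextToken, prompt: List[int], n: int) -> List[int]:
    """Baseline: one model call per generated token."""
    out = list(prompt)
    for _ in range(n):
        out.append(model(out))
    return out[len(prompt):]

def speculative_decode(target: NextToken, draft: NextToken, prompt: List[int],
                       n: int, k: int = 2) -> Tuple[List[int], int]:
    """Greedy speculative decoding; returns (tokens, number of verification passes)."""
    out = list(prompt)
    passes = 0
    while len(out) - len(prompt) < n:
        # 1) The draft model proposes k tokens autoregressively.
        proposal, ctx = [], list(out)
        for _ in range(k):
            proposal.append(draft(ctx))
            ctx.append(proposal[-1])
        # 2) The target checks every proposed position (one batched pass in practice).
        passes += 1
        ctx = list(out)
        for tok in proposal:
            expected = target(ctx)
            ctx.append(expected)       # always keep the target's choice
            if expected != tok:
                break                  # first mismatch: discard the rest of the draft
        else:
            ctx.append(target(ctx))    # all accepted: the pass yields one bonus token
        out = ctx
    return out[len(prompt):len(prompt) + n], passes

def target(ctx: List[int]) -> int:
    """Hypothetical 'expensive' model."""
    return (ctx[-1] * 3 + 1) % 10

def draft(ctx: List[int]) -> int:
    """Hypothetical cheap model that is wrong whenever the last token is 3."""
    return 5 if ctx[-1] == 3 else (ctx[-1] * 3 + 1) % 10

tokens, passes = speculative_decode(target, draft, [1], n=12)
print(tokens == greedy_decode(target, [1], 12), passes)  # True 6
```

Here twelve tokens need only six verification passes instead of twelve target calls, and the output is unchanged.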

- Extensive Training and Benchmark Performance

Deepseek’s capabilities are the result of pre-training on 14.8 trillion tokens drawn from a wide range of sources. This extensive training gives the model a nuanced understanding of language, which translates into strong performance across various benchmarks.

In its published evaluations, Deepseek v3 outperforms other open-source models on many key benchmarks, including Massive Multitask Language Understanding (MMLU), and performs comparably to leading closed-source models. Its strong performance spans multiple domains, from solving mathematical problems to generating high-quality code and supporting multilingual tasks. This versatility makes Deepseek a reliable solution for applications across industries, including education, technology, and business.

- Long Context Window Capabilities

One of the defining features of Deepseek is its support for long context windows of up to 128K tokens—a significant advancement over many existing models. This extended context capability is crucial for tasks requiring deep contextual comprehension, such as document summarization, legal analysis, and literature review synthesis.

By maintaining coherence and relevance over longer passages, Deepseek can effectively handle complex, large-scale content. This feature is particularly valuable for academic research tools, enterprise knowledge management systems, and content generation platforms where detailed context is essential. Whether it’s summarizing lengthy contracts or assisting in large-scale creative writing projects, Deepseek’s ability to work with extended inputs enhances its practical utility in real-world applications.
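A back-of-the-envelope calculation shows why MLA matters at a 128K-token context length. The sketch compares the key-value cache needed for one 128K-token sequence under two layouts: conventional multi-head attention and a latent cache. The layer count, head count, latent size, and positional-key size are taken from our reading of Deepseek v3's publicly released configuration and should be treated as assumptions. The multi-head baseline is hypothetical, and both layouts assume BF16 storage (2 bytes per value).

```python
LAYERS = 61           # assumed number of transformer layers
HEADS = 128           # assumed number of attention heads
HEAD_DIM = 128        # hypothetical per-head key/value size for the MHA baseline
KV_LATENT = 512       # assumed compressed KV latent size per token per layer (MLA)
ROPE_KEY = 64         # assumed positional key cached alongside the latent
BYTES = 2             # BF16
CONTEXT = 128 * 1024  # tokens in one long sequence

def cache_bytes(values_per_token_per_layer: int) -> int:
    """Total KV cache size for one sequence of CONTEXT tokens."""
    return values_per_token_per_layer * LAYERS * CONTEXT * BYTES

mha = cache_bytes(2 * HEADS * HEAD_DIM)    # full keys + values for every head
mla = cache_bytes(KV_LATENT + ROPE_KEY)    # one latent vector + positional key
print(f"MHA: {mha / 2**30:.0f} GiB, MLA: {mla / 2**30:.1f} GiB, ratio {mha / mla:.0f}x")
# MHA: 488 GiB, MLA: 8.6 GiB, ratio 57x
```

Under these assumptions, a conventional cache for a single 128K-token sequence would exceed the memory of any single accelerator, while the latent cache stays in the single-digit gigabytes.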

Deepseek’s key features collectively elevate its functionality and efficiency, setting a new standard in AI-driven language models. These innovations enable the model to compete with, and often surpass, existing models in terms of scalability, speed, and performance.

Five Popular Applications of Deepseek

Deepseek’s cutting-edge architecture and versatile feature set empower a broad spectrum of applications across diverse industries. Here’s a closer look at the key areas where Deepseek is making the greatest impact.

- Software Development

Deepseek streamlines modern software development by automating routine tasks, accelerating code generation, and supporting debugging. Its code generation capabilities, showcased by Deepseek Artifacts, let developers generate working applications from a single prompt using frameworks like React and Tailwind. This not only speeds up development but also integrates with platforms such as CodeSandbox, enabling instant editing, collaboration, and deployment.

Beyond code creation, it aids in automated testing and quality assurance, quickly identifying potential bugs and suggesting relevant fixes. This streamlined approach reduces development cycles and improves overall software quality, freeing developers to focus on solving complex challenges rather than repetitive tasks.
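For developers who want to use code generation programmatically, Deepseek also offers an API. The sketch below builds a chat-completion request using only the Python standard library. The endpoint URL, the `deepseek-chat` model name, and the OpenAI-style request format reflect Deepseek's public API documentation as we understand it; they may change, so check the current docs. The `DEEPSEEK_API_KEY` environment variable name is our own choice. The request is sent only when `send` is called.

```python
import json
import os
import urllib.request

API_URL = "https://api.deepseek.com/chat/completions"  # verify against current API docs

def build_request(prompt: str, api_key: str, model: str = "deepseek-chat") -> urllib.request.Request:
    """Create (but do not send) an OpenAI-style chat-completion request."""
    body = {
        "model": model,
        "messages": [
            {"role": "system", "content": "You are a helpful coding assistant."},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json", "Authorization": f"Bearer {api_key}"},
        method="POST",
    )

def send(req: urllib.request.Request) -> str:
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(req, timeout=60) as resp:
        payload = json.load(resp)
    return payload["choices"][0]["message"]["content"]

if __name__ == "__main__" and os.environ.get("DEEPSEEK_API_KEY"):
    req = build_request("Write a Python function that reverses a string.",
                        os.environ["DEEPSEEK_API_KEY"])
    print(send(req))
```

Keeping request construction separate from sending makes it easy to log or test prompts without spending API credits.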

- Education

In the education sector, Deepseek enhances both learning and teaching experiences by providing AI-driven personalized assistance. Students benefit from real-time feedback on coding assignments, enabling them to debug issues and grasp difficult concepts more effectively. This hands-on approach fosters deeper learning and boosts students’ confidence in their skills.

For educators, it automates time-consuming tasks such as grading and performance analysis, offering valuable insights into students’ progress. By supporting interactive learning environments where students can engage directly with AI-driven tools, Deepseek equips learners with practical skills vital for future careers.

- Business Automation

Deepseek excels in business automation, transforming how organizations handle repetitive processes and customer interactions. Its intelligent chatbots provide responsive, human-like customer support, reducing the workload on human agents and enhancing user satisfaction.

In addition to customer service, it offers powerful predictive analytics capabilities. By analyzing historical data, it helps businesses anticipate trends, optimize operations, and make data-driven decisions. Whether in finance, healthcare, or marketing, Deepseek’s ability to rapidly process and interpret large datasets ensures organizations can stay competitive in fast-paced markets.

- Data Analysis and Insights

Deepseek’s advanced data processing capabilities empower businesses and researchers to extract actionable insights from vast and complex datasets. Its intelligent search functionality allows users to quickly retrieve highly relevant information, making it a valuable tool for market research, strategic planning, and decision-making.

By recognizing intricate patterns and providing comprehensive analyses, it simplifies data-driven processes, helping teams unlock hidden opportunities and improve operational efficiency.

- Creative Applications

Beyond conventional applications, Deepseek offers exciting possibilities in creative fields, such as content creation, design, and even music composition. It can generate article drafts, provide innovative content ideas, or compose music based on specific prompts. This versatility opens up new frontiers for writers, designers, and musicians, blending AI-driven support with human creativity.

Whether used for brainstorming or automating parts of the creative process, it empowers professionals in artistic domains to explore new levels of productivity and innovation.

Deepseek’s diverse applications demonstrate its immense potential as a transformative AI tool. It’s not just enhancing how we work but reshaping what’s possible in the world of artificial intelligence.

Empower Your Future with Deepseek!

Deepseek marks a significant breakthrough in AI language modeling, offering an innovative architecture and robust feature set tailored for a multitude of applications. Whether it’s accelerating software development with intelligent code generation, enriching education through personalized learning, or optimizing business processes with automation, Deepseek stands out as a highly adaptable and powerful tool. Its support for long context windows and its capacity to manage complex tasks further distinguish it from other models, making it a strong choice for developers, educators, and businesses seeking next-level AI solutions.

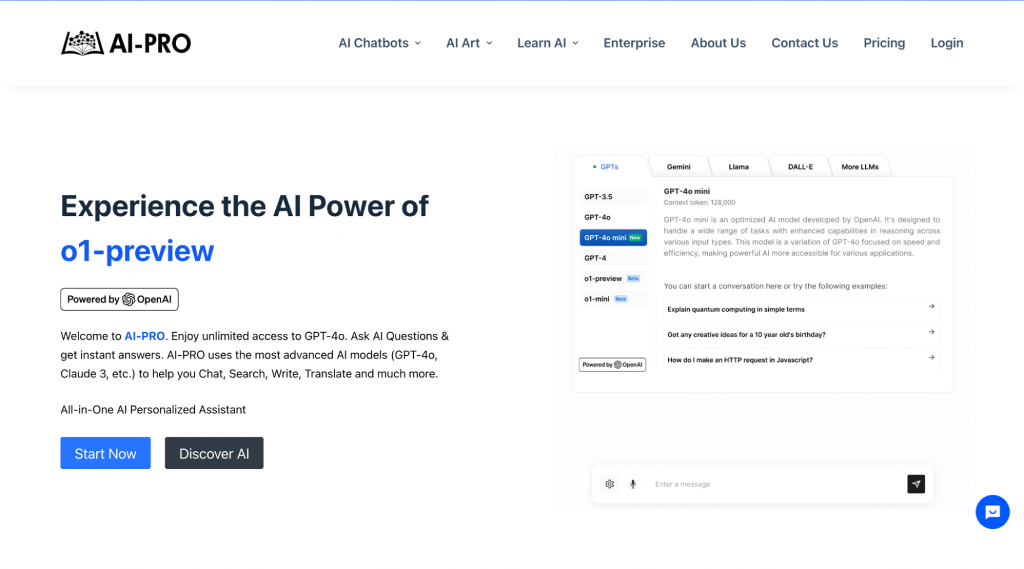

For those eager to maximize AI-driven potential, the AI-Pro Pro Max plan provides an ideal pathway. This plan grants access to Deepseek LLM Chat, allowing users to work with Deepseek alongside other advanced models. Whether you aim to boost productivity, foster innovation, or drive better outcomes in your work, Deepseek equips you with the essential tools to succeed.

Don’t miss the chance to explore the transformative power of Deepseek. Join a growing community that’s leveraging cutting-edge AI to revolutionize workflows and shape the future of technology.