Stable Diffusion’s AI video generation has emerged as one of the most powerful new tools for communication and storytelling in the digital media landscape. From captivating advertisements to educational tutorials and entertaining vlogs, videos are an integral part of our online experience, and the ability to create them rapidly is a competitive advantage that many businesses cannot do without.

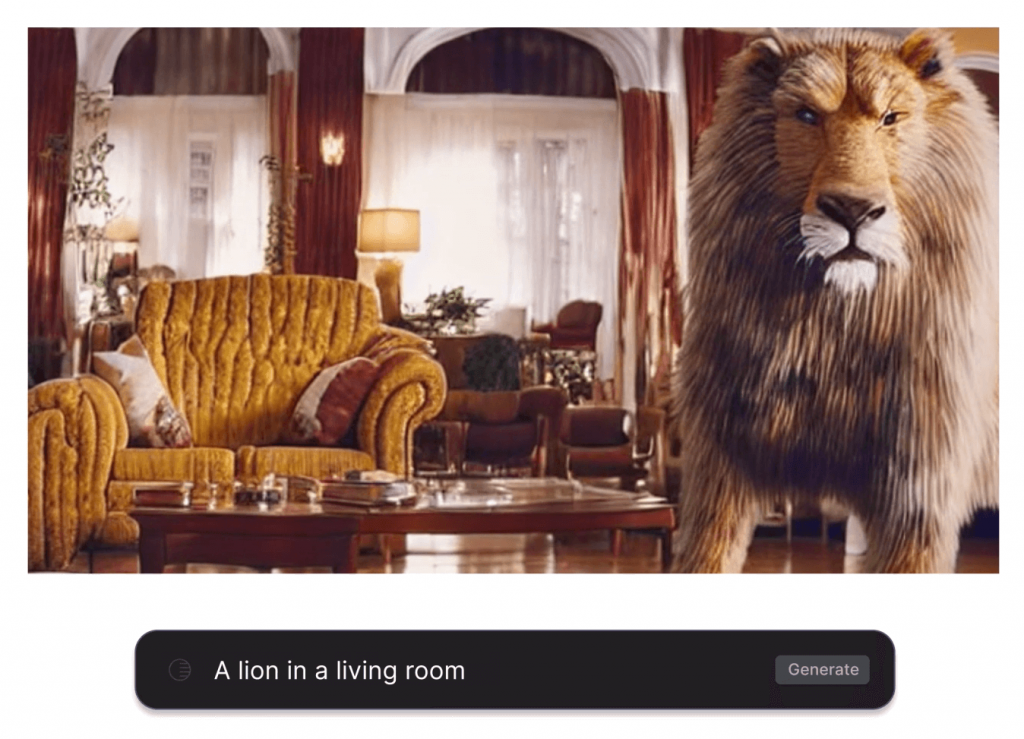

On March 20th, in San Francisco, Runway, the startup that helped create the widely used Stable Diffusion AI image generator, unveiled a new AI model. The model can generate three seconds of video footage from a written description, for example, “a lion in a living room.”

Despite the groundbreaking nature of this technology, Runway has decided not to release its Gen-2 text-to-video model broadly at launch, citing safety and business reasons. Unlike Stable Diffusion, the model will not be open-sourced. Initially, Gen-2 will be hosted on Discord and accessible only through a waitlist on the Runway website.

The idea of employing AI to produce videos from written descriptions is not novel: Meta Platforms Inc (META.O) and Google (GOOGL.O) both published research papers on text-to-video AI models towards the end of the previous year. According to Cristobal Valenzuela, the CEO of Runway, the distinction is that Runway’s text-to-video AI model is being made available to the general public, starting with the waitlist.

In the following sections of this article, we will delve deep into the fascinating world of AI video generation, exploring the technology’s capabilities, potential applications, and how it can revolutionize various industries. Whether you’re a content creator, marketer, or business owner, read on as we unlock the full potential of this game-changing innovation.

The Evolution of Video Content

To appreciate the significance of Stable Diffusion’s AI video generator, we must first acknowledge the remarkable journey that video content has undertaken. From the grainy, black-and-white films of the early 20th century to the high-definition, 4K masterpieces of today, video production has witnessed a relentless march of progress. The emergence of platforms like YouTube, TikTok, and Netflix has not only democratized video creation but also raised the bar for quality and creativity.

The Rise of AI in Video Production

As video content became more ubiquitous, the demands on content creators and marketers escalated. The need to produce engaging videos quickly and efficiently led to the integration of AI into video production processes. AI’s ability to analyze data, identify trends, and automate tasks has proven invaluable in streamlining workflows and optimizing content.

Overview of Stable Diffusion’s AI Video Generator

Stable Diffusion AI Video represents the latest evolution in this ongoing narrative. It’s not merely a tool; it’s a game-changer. This technology harnesses the power of AI to generate and enhance video content with unprecedented realism and quality. Whether you’re looking to transform raw footage into cinematic masterpieces or take your marketing videos to the next level, Stable Diffusion AI Video has the potential to be your secret weapon.

Understanding Stable Diffusion’s AI Video Generator

Now that we’ve set the stage for the AI revolution in video content, it’s time to dive deeper into the heart of this transformative technology.

What Is Stable Diffusion and How Does it Work?

Stable Diffusion is an advanced deep learning model developed in 2022 by researchers from the CompVis Group at Ludwig Maximilian University of Munich and Runway. This state-of-the-art technology primarily focuses on generating detailed images based on text descriptions but also excels in tasks like inpainting and outpainting.

At its core, Stable Diffusion is a latent diffusion model, a type of deep generative neural network. Its distinguishing feature is that image generation can be conditioned on text prompts. The model consists of three main parts: a variational autoencoder (VAE), a U-Net, and an optional text encoder. The VAE encoder compresses an image from pixel space into a smaller latent space, where Gaussian noise is iteratively added during forward diffusion. The U-Net then removes that noise step by step, guided by the encoded text prompt, and the VAE decoder converts the denoised latent back into the final image.
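To make the noise-adding half of that process concrete, here is a minimal sketch of forward diffusion on a toy latent, using only the Python standard library. The linear noise schedule, its start and end values, the 16-value latent, and all function names are illustrative assumptions, not Stable Diffusion’s actual configuration or code.

```python
import math
import random

def linear_beta_schedule(num_steps, beta_start=1e-4, beta_end=0.02):
    """Per-step noise variances that grow linearly (illustrative values)."""
    if num_steps == 1:
        return [beta_start]
    step = (beta_end - beta_start) / (num_steps - 1)
    return [beta_start + i * step for i in range(num_steps)]

def cumulative_alphas(betas):
    """alpha_bar[t] is the product of (1 - beta) up to and including step t."""
    alpha_bars, running = [], 1.0
    for beta in betas:
        running *= 1.0 - beta
        alpha_bars.append(running)
    return alpha_bars

def add_noise(latent, t, alpha_bars, rng):
    """Jump directly to step t of forward diffusion for a flat toy latent."""
    signal_scale = math.sqrt(alpha_bars[t])
    noise_scale = math.sqrt(1.0 - alpha_bars[t])
    return [signal_scale * x + noise_scale * rng.gauss(0.0, 1.0) for x in latent]

rng = random.Random(0)
alpha_bars = cumulative_alphas(linear_beta_schedule(1000))
toy_latent = [1.0] * 16  # stand-in for a VAE-encoded image
slightly_noisy = add_noise(toy_latent, 10, alpha_bars, rng)
mostly_noise = add_noise(toy_latent, 999, alpha_bars, rng)
```

Early steps leave most of the original signal in place, while by the last step almost none of it remains. The U-Net’s job is to learn the reverse direction, predicting the noise so it can be removed one step at a time.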

What makes Stable Diffusion’s AI video generation stand out is its adaptability and efficiency. With 860 million parameters in the U-Net and 123 million in the text encoder, it’s considered relatively lightweight and can run on consumer GPUs, unlike its predecessors.
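As a rough back-of-the-envelope check on the “consumer GPU” claim, the parameter counts above can be turned into an estimate of the memory needed just to hold the weights. This sketch assumes 4 bytes per parameter for 32-bit floats and 2 bytes for 16-bit floats. It ignores the VAE, activations, and framework overhead, so real usage will be higher.

```python
UNET_PARAMS = 860_000_000          # from the figures quoted above
TEXT_ENCODER_PARAMS = 123_000_000  # from the figures quoted above

BYTES_PER_PARAM = {"float32": 4, "float16": 2}

def weight_memory_gib(num_params, dtype):
    """Memory needed to store the weights alone, in GiB."""
    return num_params * BYTES_PER_PARAM[dtype] / 2**30

for dtype in ("float32", "float16"):
    total = weight_memory_gib(UNET_PARAMS + TEXT_ENCODER_PARAMS, dtype)
    print(f"{dtype}: about {total:.2f} GiB for U-Net + text encoder weights")
```

Under these assumptions the weights come to a few gibibytes at most, which is within reach of many consumer graphics cards.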

It was trained on image-caption pairs taken from LAION-5B, a vast dataset scraped from the web. The dataset was created by LAION, a non-profit that receives funding from Stability AI, and the training involved significant computational resources.

The magic of Stable Diffusion lies in its sophisticated algorithms and neural networks. Because the model learned from images rather than video, video workflows built on it typically apply that knowledge frame by frame, either generating visually stunning videos from prompts or restyling and enhancing existing footage.

Key to its functionality is the ability to maintain stability in video generation. This means you get high-quality, consistent results, whether you’re upscaling low-resolution footage, transforming photos into lifelike videos, or applying your unique artistic style.

Key Features and Benefits

Content creators, marketers, and businesses alike are continuously seeking innovative ways to harness the power of video, and Stable Diffusion has been making waves in video generation. In this section, we’ll look at the key features and benefits of Stable Diffusion’s video generation technology and explore how it’s changing the way we create and use video content.

Unparalleled Realism

Stable Diffusion’s video generation technology stands out for its ability to produce hyper-realistic video content. By harnessing the power of deep learning and advanced algorithms, this platform can generate visuals that are almost indistinguishable from actual footage. Whether you need lifelike animations, realistic simulations, or convincing special effects, Stable Diffusion’s technology takes creativity to a whole new level.

Seamless Integration

One of the significant advantages of Stable Diffusion is how easily its output fits into existing workflows. Generated frames and clips are ordinary image and video files, so content creators can import them into Adobe Premiere, Final Cut Pro, or other popular video editing tools like any other footage, allowing for a smoother and more efficient production process.

Time and Cost Efficiency

Traditional video production can be a time-consuming and expensive endeavor. Stable Diffusion’s technology drastically reduces the time and resources needed to create high-quality video content. By automating various aspects of the video generation process, it minimizes the need for extensive shoots, costly equipment, and post-production work. This translates into substantial cost savings and faster turnaround times for projects.

Versatility and Customization

Stable Diffusion’s video generation technology is incredibly versatile, catering to a wide range of applications and industries. Whether you’re in e-commerce, entertainment, education, or marketing, this platform offers customization options that allow you to tailor the generated content to your specific needs. Adjust parameters such as style, tone, and visual elements to align with your brand and messaging.

Enhanced Creativity

The technology empowers content creators with tools to explore their creativity without limits. Generate unique and captivating video content by blending different styles, moods, and themes. Experiment with storytelling and visual aesthetics, pushing the boundaries of what’s possible in the realm of video creation. It’s a playground for imagination and innovation.

Scalability

Stable Diffusion’s video generation technology is designed to scale with your needs. Whether you’re a small business, a growing startup, or a large enterprise, this platform can accommodate your demands. As your video content requirements expand, you can rely on Stable Diffusion to maintain consistent quality and efficiency, ensuring your brand’s message reaches a broader audience.

Accessibility

The user-friendly interface and intuitive controls make Stable Diffusion’s platform accessible to individuals with varying levels of technical expertise. You don’t need to be a video production expert to harness the power of this technology. It democratizes the world of video creation, making it accessible to content creators from all backgrounds.

The Importance of Stable Diffusion AI in the Modern Digital Landscape

In a world where video content is king, staying ahead of the curve is paramount. Stable Diffusion’s AI video generator is not just a tool; it’s a strategic advantage. It empowers individuals and businesses to produce exceptional videos that resonate with audiences and drive results.

In the next sections, we’ll delve deeper into how you can get started with Stable Diffusion, explore its features, and share tips and best practices to help you make the most of this cutting-edge technology.

Maximizing the Potential of Stable Diffusion

Now that you have your foot in the door with Stable Diffusion, it’s time to explore how to unleash its full potential. Whether you’re aiming to create stunning videos or elevate your existing content, the following sections will guide you through the process.

Choosing the Right Style

One of the key strengths of Stable Diffusion’s AI video generator is its versatility in style. Your choice of style can radically transform the look and feel of your video content. Here’s how to make the most of it:

Custom Styles: For a truly unique touch, consider creating your own custom style. This allows you to tailor the AI’s output to match your brand’s identity or your personal artistic vision.

Matching Content to Style: Be strategic in your style selection. Consider the message or mood you want to convey with your video and choose a style that complements it. For example, a vintage style may work well for nostalgic content, while a futuristic style suits tech-related subjects.

Enhancing Video Quality

Stable Diffusion is designed to elevate video quality, ensuring your content shines. Here’s how to make the most of its video enhancement capabilities:

Upscaling: If you’re working with lower-resolution footage, utilize the upscaling feature. It can transform grainy or pixelated videos into crisp, high-definition masterpieces.

- In the realm of AI-driven video enhancement, Stable Diffusion and extensions, like Mov 2 Mov, play a pivotal role in elevating video quality. Stable Diffusion’s core strength lies in its ability to maintain consistency and realism throughout the generation process.

- When coupled with Mov 2 Mov, a sophisticated style transfer mechanism comes into play. This mechanism, guided by user-defined prompts and quality parameters, allows for precise control over the generated video’s style, motion, and overall visual appeal.

- ControlNet, which can be used alongside Mov 2 Mov, conditions each generated frame on structure extracted from the source, such as a pose, so that the motion within the video remains smooth and faithful to the source material, be it a dance performance or a posed shot. Moreover, the flexibility to choose from different checkpoint models further refines the generated video’s look and style.

In essence, this collaborative approach between Stable Diffusion and Mov 2 Mov facilitates the creation of captivating videos that not only preserve the essence of the source but also imbue it with a heightened sense of realism and artistic expression.
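The frame-by-frame idea behind this kind of workflow can be sketched in a few lines. This is not Mov 2 Mov’s actual code: `stylize_video` and `fake_stylize_frame` are hypothetical names, the stylizer is a stand-in for a real image-to-image call, and reusing one prompt and one seed for every frame is an assumption made here to illustrate one common way of keeping frames consistent.

```python
from typing import Callable, List, Optional, Sequence

def stylize_video(
    frames: Sequence[str],
    stylize_frame: Callable[[str, str, int], str],
    prompt: str,
    seed: int,
    max_frames: Optional[int] = None,
) -> List[str]:
    """Apply the same prompt and seed to every selected frame, in order."""
    selected = frames if max_frames is None else frames[:max_frames]
    return [stylize_frame(frame, prompt, seed) for frame in selected]

def fake_stylize_frame(frame: str, prompt: str, seed: int) -> str:
    """Hypothetical stand-in for an img2img call; it only tags the frame name."""
    return f"{frame}|{prompt}|seed={seed}"

source_frames = [f"frame_{i:04d}.png" for i in range(5)]
styled = stylize_video(
    source_frames, fake_stylize_frame, "watercolor dancer", seed=42, max_frames=3
)
print(styled)
```

In a real pipeline the stand-in function would be replaced by a call into your Stable Diffusion setup, and the resulting frames would be reassembled into a video file.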

Noise Reduction: Reduce flicker and artifacts in your generated videos to achieve a clean, professional look.

- One of the parameters you can adjust in Stable Diffusion is the “denoising strength.” Despite the name, it is not a noise-removal filter: in image-to-image generation, it controls how much of each source frame is replaced by newly generated content.

- A low denoising strength keeps the generated frames close to the source footage, while a high value gives the AI more freedom to repaint them.

- Because each frame is generated separately, lower values tend to reduce frame-to-frame inconsistencies or imperfections such as flicker, at the cost of a weaker style change.

- Additionally, the use of ControlNet is crucial for maintaining smooth and consistent motion in the AI-generated video.

- ControlNet helps the AI understand and reproduce natural movements, reducing jitter or erratic motion that might introduce noise in the form of visual artifacts.
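To see what the denoising strength setting does in practice, it helps to look at how image-to-image pipelines commonly interpret it: as the fraction of the sampling schedule that is actually run on the source frame. The sketch below follows that convention. The function name is hypothetical, and individual tools may round or clamp the value differently.

```python
def img2img_steps(num_inference_steps, strength):
    """Return (start_step, steps_to_run) for a given denoising strength.

    strength = 0.0 leaves the source frame untouched (no steps are run);
    strength = 1.0 runs the full schedule, largely ignoring the source frame.
    """
    if not 0.0 <= strength <= 1.0:
        raise ValueError("strength must be between 0.0 and 1.0")
    steps_to_run = min(int(num_inference_steps * strength), num_inference_steps)
    start_step = num_inference_steps - steps_to_run
    return start_step, steps_to_run

for strength in (0.25, 0.5, 1.0):
    start, run = img2img_steps(20, strength)
    print(f"strength={strength}: skip {start} steps, run {run} steps")
```

Under this convention, a lower strength keeps each output frame closer to its source, which is why it tends to produce steadier video.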

Frame Rate Adjustments: Optimize the frame rate to achieve the desired cinematic or smooth-motion effect.

- Stable Diffusion workflows also give you control over the frame rate of the videos you generate.

- You first provide a video input featuring actions or dance performances. ControlNet then plays a pivotal role in ensuring consistency, keeping character poses and movements smooth.

- Employing prompts to guide creative direction, and selecting a specific checkpoint model like Realistic Vision 5.1 for influence, content creators meticulously fine-tune parameters such as sampling methods, denoising strength, and frame dimensions.

- The AI then processes the video frame by frame. The “Max Frames” setting lets content creators specify how many frames are generated. For a clip of a given duration, that frame count determines the resultant frame rate.

- The final step involves a critical review and potential fine-tuning of settings to achieve the envisioned frame rate, empowering content creators to realize their creative objectives seamlessly through AI-generated videos.
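The relationship between frame count, duration, and frame rate in the steps above is simple arithmetic, sketched here. Both function names are hypothetical, and the resampling shown uses a plain nearest-earlier-frame strategy, which is only one of several ways to change a clip’s frame rate.

```python
def effective_fps(frame_count, duration_seconds):
    """Frame rate that results from spreading frame_count frames over a duration."""
    return frame_count / duration_seconds

def resample_indices(source_frame_count, source_fps, target_fps):
    """Pick which source frames to keep (or repeat) to reach target_fps."""
    duration = source_frame_count / source_fps
    target_count = max(1, round(duration * target_fps))
    return [
        min(source_frame_count - 1, int(i * source_fps / target_fps))
        for i in range(target_count)
    ]

# 72 generated frames played back over 3 seconds
print(effective_fps(72, 3))

# Halving the frame rate of a 60-frame, 30 fps clip keeps every second frame
print(resample_indices(60, 30, 15)[:5])
```

Generating fewer frames for the same duration cuts processing time but makes motion choppier, so it is worth testing on a short clip first.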

Ethical Considerations in AI Video Production

With the rise of AI in video production, ethical considerations have also become paramount:

Deepfakes and Misuse

In the ever-evolving landscape of AI video production, one of the most pressing ethical considerations revolves around deepfake technology and its potential for misuse. As AI-generated content becomes increasingly sophisticated, addressing this concern becomes paramount.

The challenge lies in finding effective ways to distinguish between genuine and AI-generated content. Developing robust authentication methods that can reliably identify AI-generated content will take center stage.

These methods will not only serve to protect against malicious use but also empower viewers to make informed judgments about the authenticity of the content they encounter. As we move forward, the ethical imperative is to strike a balance between the creative potential of AI and safeguarding against its misuse, ensuring that technology serves as a tool for empowerment rather than deception.

Data Privacy

The ethical considerations in AI video production extend to the fundamental aspect of data privacy. AI systems, including those used for video generation, rely heavily on vast datasets for training. In this context, safeguarding data privacy and ensuring compliance with stringent regulations are of paramount importance.

As AI continues to evolve, so will the need for ethical data handling practices. The responsible collection, storage, and utilization of data will be central to maintaining trust with both content creators and audiences.

By adopting robust data privacy measures and adhering to evolving regulations, the AI video production industry can uphold the highest ethical standards while harnessing the transformative power of technology.

Bias and Fairness

The quest for ethical AI video production also encompasses addressing biases and ensuring fairness in content generation. AI algorithms are not immune to bias, often reflecting the biases present in the data they are trained on.

To meet ethical standards, continuous refinement of these algorithms is essential. This process involves identifying and rectifying biases to ensure that content generated by AI systems is free from discriminatory or prejudiced elements.

Achieving fairness in content generation is not just a goal but a responsibility, as AI plays an increasingly influential role in shaping narratives and visual representations across various media. By prioritizing bias mitigation and fairness, the industry can forge a path where AI-driven video production aligns with ethical values and promotes inclusivity.

Transparency

In the ethical framework of AI video production, transparency emerges as a fundamental principle. Maintaining trust with audiences and content creators hinges on providing transparency in the creation process of AI-generated content.

Transparency entails making it clear when AI technology is involved in content production. It empowers viewers to understand how content is generated and allows them to make informed choices about the content they consume.

The ethical commitment to transparency is not only about building trust but also about fostering responsible content creation and consumption in an era where AI increasingly blurs the line between reality and artifice.

Regulations

Anticipating evolving regulations and guidelines is a key facet of ethical AI video production. As the industry continues to advance, regulations will evolve to govern the use of AI in content creation and protect against misuse.

Embracing these regulations is not a limitation but a safeguard, ensuring that the ethical principles of transparency, fairness, and privacy are upheld.

By proactively engaging with regulations, the industry can demonstrate its commitment to responsible AI use and the ethical imperative of ensuring technology serves the best interests of society. In the ever-changing landscape of AI video production, ethical considerations will continue to shape the industry’s path, fostering a future where innovation and ethics go hand in hand.

The future of Stable Diffusion’s AI video generator is incredibly promising, with exciting developments on the horizon. As this technology matures and becomes more accessible, it will continue to reshape industries, redefine creative possibilities, and empower individuals and businesses to achieve new heights in video content production.

Create Videos with Stable Diffusion Today

Ready to reshape your video content creation with cutting-edge AI-powered video production? It’s time to make your move and tap into the remarkable capabilities of Stable Diffusion’s AI video generation. But that’s not all – if you’re eager to have the tools to boost your productivity and stay updated with the latest news and information in the AI industry, don’t forget to visit AI-pro.org’s website.

Once you’re in the driver’s seat, embark on an adventure of endless possibilities. Elevate your videos, craft mesmerizing visuals, and ensure your content shines in the digital realm. Keep a vigilant eye on the ever-evolving world of AI video production.

As technology leaps forward, so does your creative canvas. Stay ahead of the curve and keep those innovative juices flowing. Your journey could serve as the spark that inspires others to unlock their creative genius with Stable Diffusion.

Frequently Asked Questions (FAQs)

Have questions about Stable Diffusion? We’ve got you covered. Explore our FAQs to find answers to common queries:

Q1: What is Stable Diffusion?

Stable Diffusion is a deep learning model that generates detailed images from text descriptions. Video workflows built on it use AI to generate and enhance video content, offering realistic video generation, high-quality upscaling, and customizable styles.

Q2: How does Stable Diffusion AI differ from traditional video editing software?

Traditional video editing software relies on manual processes, while Stable Diffusion automates and enhances video production using AI algorithms. It can generate realistic content and upscale videos with ease.

Q3: What are the system requirements for using Stable Diffusion AI?

System requirements vary with the tools you use. Stable Diffusion is relatively lightweight and can run on a consumer GPU, but you’ll still need a computer with sufficient processing power, memory, and a compatible operating system.

Q4: Can I use Stable Diffusion AI for both personal and commercial projects?

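Yes, Stable Diffusion is versatile and suitable for both personal and commercial use. Before publishing commercial work, check the license terms of the specific model and tools you use.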