Context Engineering: The New Frontier in AI System Design

What Is Context Engineering?

The field of artificial intelligence (AI), especially in the domain of large language models (LLMs) like GPT-4, has seen an explosion of interest in prompt engineering. However, as LLMs become more capable and expectations for their performance rise, a new discipline has emerged: context engineering. This practice is rapidly becoming the essential skill for anyone working with advanced AI systems.

The Evolution from Prompt Engineering

Prompt engineering refers to crafting inputs—prompts—that guide LLMs to produce desired outputs. While effective prompts are crucial, they represent only one layer of interaction. As models have grown more powerful and their context windows (the amount of input they can “see” at once) have expanded, a new challenge has arisen: what information should be included in that window, and how should it be structured?

This is where context engineering comes in. It’s not just about writing a clever prompt; it’s about designing the entire experience and information ecosystem that the model uses to produce its outputs. As Phil Schmid summarized, “Context engineering is the discipline of designing and building dynamic systems that provide the right information and tools, in the right format, at the right time for LLMs and AI agents.”

Defining the Context Window

At the heart of context engineering lies the context window: the segment of text, data, or information fed into an LLM for each inference or generation. The context window has a finite size, measured in tokens (sub-word units; in English, one token is roughly three-quarters of a word). For example, GPT-4 Turbo can process up to 128k tokens in its context window, but this is still a constraint when dealing with vast knowledge bases, documents, or live data.

Context engineering is the art and science of filling that window with the most relevant, timely, and useful information, arranged so the AI can utilize it effectively. Unlike prompt engineering, which focuses on “what question do I ask and how do I ask it?”, context engineering focuses on “what background, data, and supporting information do I provide so the model can give the best possible answer?”
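
To make the size constraint concrete, here is a minimal sketch of a token budget check. It assumes a rough heuristic of about four characters per token for English text; that ratio and the function names `estimate_tokens` and `fits_in_window` are illustrative assumptions, not part of any provider's API. For exact counts, use the tokenizer that ships with your model.

```python
def estimate_tokens(text: str) -> int:
    """Rough estimate: about 4 characters per token (an assumption, not a tokenizer)."""
    return (len(text) + 3) // 4  # ceiling division; empty text gives 0


def fits_in_window(parts: list[str], window_tokens: int, reserved_for_output: int) -> bool:
    """Return True if all parts fit while leaving room for the model's reply."""
    used = sum(estimate_tokens(part) for part in parts)
    return used + reserved_for_output <= window_tokens
```

Reserving part of the window for the reply matters because, in most LLM APIs, the input and the generated output share the same token limit.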

Why Context Engineering Matters for AI and LLMs

Tackling Limitations of Prompt Engineering

Prompt engineering alone struggles with complex, real-world scenarios. As tasks get more sophisticated—legal analysis, enterprise search, multi-step reasoning—the information needed to answer a question well may be scattered across many sources, or embedded in large documents. Simply crafting a better prompt won’t help if the model lacks access to the right context.

Context engineering addresses the following limitations:

- Information Overload: LLMs can’t process all possible relevant data at once. Context engineering prioritizes what matters most.

- Relevance: The quality of an LLM’s output is highly dependent on the quality and relevance of its context.

- Consistency: A fixed, well-defined context structure makes results more repeatable, especially in dynamic or multi-turn conversations.

- Scalability: As use cases grow in complexity, hand-written prompts stop scaling, and systematic context assembly becomes the practical way to build robust, enterprise-grade AI systems.

Real-World Impact: Context Engineering in Action

To illustrate, consider an AI assistant designed for legal research. A simple prompt like “Summarize the relevant case law for this dispute” is insufficient. The LLM needs access to statutes, previous cases, facts of the current case, and even user preferences. Context engineering involves:

- Selecting the most applicable documents

- Summarizing or compressing them to fit the context window

- Arranging information so the LLM can synthesize and reference it efficiently

The result is a system that can ground its answers in the specific statutes, cases, and facts at hand, which a system relying on prompt wording alone cannot do.

Core Principles of Context Engineering

Context engineering is not just an ad hoc process. It’s a discipline underpinned by clear principles that, when followed, maximize the value of LLM-powered applications.

Relevance and Selectivity

The most fundamental principle is relevance. Every token in the context window is precious. Context engineers must:

- Filter out irrelevant or redundant information

- Prioritize content based on its importance to the current task

- Adapt context dynamically as the conversation or use case evolves
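
The filtering and prioritizing steps above can be sketched as a small selection function. This is a simplified illustration: relevance is scored by plain word overlap with the query (a stand-in for a real relevance model), token cost uses an assumed four-characters-per-token heuristic, and the names `score` and `select_passages` are hypothetical.

```python
def score(query: str, passage: str) -> float:
    """Fraction of query words that also appear in the passage."""
    query_words = set(query.lower().split())
    passage_words = set(passage.lower().split())
    return len(query_words & passage_words) / len(query_words) if query_words else 0.0


def select_passages(query: str, passages: list[str], budget_tokens: int,
                    min_score: float = 0.2) -> list[str]:
    """Drop duplicates and low-relevance passages, then pack the best ones into the budget."""
    seen: set[str] = set()
    ranked: list[tuple[float, str]] = []
    for passage in passages:
        key = " ".join(passage.lower().split())  # normalize case and whitespace
        if key in seen:
            continue  # redundant copy
        seen.add(key)
        relevance = score(query, passage)
        if relevance >= min_score:
            ranked.append((relevance, passage))
    ranked.sort(key=lambda pair: pair[0], reverse=True)  # most relevant first

    chosen: list[str] = []
    used = 0
    for _, passage in ranked:
        cost = (len(passage) + 3) // 4  # assumed ~4 characters per token
        if used + cost <= budget_tokens:
            chosen.append(passage)
            used += cost
    return chosen
```

Calling `select_passages` again with each new user query is one simple way to adapt the context as the conversation evolves.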

Structure and Formatting

How information is presented matters as much as what is presented. Key considerations include:

- Ordering: Placing the most critical information first

- Formatting: Using tables, bullet points, or special tokens to guide the model’s attention

- Separation: Distinguishing between facts, instructions, and references

Proper structuring helps the LLM parse and utilize context more effectively.
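
As a rough sketch of these considerations, the function below assembles a context string with labeled sections in a fixed order. The section titles, the `###` delimiter, and the name `build_context` are arbitrary choices made for illustration; the right layout depends on the model and task.

```python
def build_context(instructions: str, facts: list[str],
                  references: list[str], question: str) -> str:
    """Assemble a context with clearly separated, consistently ordered sections."""
    sections = [
        ("INSTRUCTIONS", [instructions]),  # what the model should do
        ("FACTS", facts),                  # information to treat as given
        ("REFERENCES", references),        # supporting material
        ("QUESTION", [question]),          # the actual request
    ]
    blocks = []
    for title, items in sections:
        if not items:
            continue  # skip empty sections instead of emitting a bare header
        body = "\n".join(f"- {item}" for item in items)
        blocks.append(f"### {title}\n{body}")
    return "\n\n".join(blocks)
```

Keeping instructions, facts, and references in separate labeled blocks makes it easier to inspect what the model saw when an answer goes wrong.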

Dynamic Adaptation

Context isn’t static. In multi-turn conversations or systems that react to real-world changes, context must be dynamically engineered:

- Updating facts and user preferences as the session progresses

- Incorporating new data streams (e.g., real-time market data, sensor inputs)

- Managing memory and history efficiently
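
One minimal way to picture this is a per-session object that overwrites stale preferences and keeps only the most recent turns. The class name `SessionContext` and its rendering format are hypothetical; a production system would likely summarize older turns rather than simply dropping them.

```python
from collections import deque


class SessionContext:
    """Tracks user preferences and a bounded window of recent conversation turns."""

    def __init__(self, max_turns: int = 4) -> None:
        self.preferences: dict[str, str] = {}
        # deque with maxlen silently discards the oldest turn when full
        self.history: deque[tuple[str, str]] = deque(maxlen=max_turns)

    def set_preference(self, key: str, value: str) -> None:
        self.preferences[key] = value  # the newest value replaces the old one

    def add_turn(self, role: str, text: str) -> None:
        self.history.append((role, text))

    def render(self) -> str:
        prefs = "\n".join(f"{k}: {v}" for k, v in sorted(self.preferences.items()))
        turns = "\n".join(f"{role}: {text}" for role, text in self.history)
        return f"User preferences:\n{prefs}\n\nRecent conversation:\n{turns}"
```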

How to Apply Context Engineering: Best Practices and Techniques

Data Gathering and Curation

Start by identifying all potential sources of information relevant to your task or domain:

- Internal documents, databases, or APIs

- Previous user interactions or preferences

- External sources (web, news, scientific literature)

Curate these sources, filtering out noise and selecting the most authoritative or current content.

Contextual Compression and Summarization

Given the context window’s size limit, raw data often won’t fit. Apply techniques such as:

- Summarization: Use LLMs or extractive methods to condense long documents

- Fact extraction: Pull out key facts, dates, or entities

- Chunking: Break large inputs into smaller, manageable pieces for iterative processing

Some advanced systems use recursive summarization, where summaries of summaries are created to further compress information.
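
Chunking and recursive summarization can be sketched as follows. The `summarize` argument is a placeholder for whatever summarizer you use (for example, an LLM call); the function names and the word-based chunk size are assumptions made for this illustration.

```python
from typing import Callable


def chunk_words(text: str, chunk_size: int, overlap: int = 0) -> list[str]:
    """Split text into chunks of at most chunk_size words, optionally overlapping."""
    if chunk_size <= 0 or not 0 <= overlap < chunk_size:
        raise ValueError("need chunk_size > 0 and 0 <= overlap < chunk_size")
    words = text.split()
    chunks: list[str] = []
    start = 0
    while start < len(words):
        chunks.append(" ".join(words[start:start + chunk_size]))
        if start + chunk_size >= len(words):
            break  # the last chunk already reaches the end of the text
        start += chunk_size - overlap
    return chunks


def recursive_summarize(text: str, summarize: Callable[[str], str],
                        max_words: int, chunk_size: int) -> str:
    """Summarize chunk by chunk, then summarize the summaries, until short enough."""
    while len(text.split()) > max_words:
        condensed = " ".join(summarize(chunk) for chunk in chunk_words(text, chunk_size))
        if len(condensed.split()) >= len(text.split()):
            break  # the summarizer is not shrinking the text; stop to avoid looping forever
        text = condensed
    return text
```

Each round loses some detail, so recursive summarization suits background material better than facts the model must quote exactly.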

Tooling and Automation

Manual context engineering is unsustainable for most real-world applications. Leverage tools such as:

- Retrieval-Augmented Generation (RAG): Systems that retrieve relevant snippets from a knowledge base in real time

- Vector databases and semantic search: Find contextually similar documents or passages

- Automated context builders: Frameworks like LangChain, LlamaIndex, or custom pipelines that assemble context dynamically

These solutions automate much of the context selection and structuring process.
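
To show the retrieval idea without depending on any particular framework, here is a toy in-memory index. It substitutes word-count vectors for learned embeddings and a Python list for a vector database, so it only illustrates the shape of the approach; `embed`, `cosine`, and `TinyIndex` are hypothetical names, not LangChain or LlamaIndex APIs.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Stand-in for an embedding model: a bag-of-words count vector."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[term] * b[term] for term in a.keys() & b.keys())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class TinyIndex:
    """Toy replacement for a vector database: stores vectors and scans them linearly."""

    def __init__(self) -> None:
        self._items: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        self._items.append((text, embed(text)))

    def search(self, query: str, k: int = 2) -> list[str]:
        query_vec = embed(query)
        ranked = sorted(self._items, key=lambda item: cosine(query_vec, item[1]), reverse=True)
        return [text for text, _ in ranked[:k]]
```

In a RAG pipeline, the passages returned by `search` would be placed in the context window ahead of the user's question.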

Evaluating and Iterating Context

Continuous improvement is essential. Evaluate your context engineering pipeline by:

- A/B testing different context configurations

- Analyzing LLM output quality and relevance

- Collecting user feedback on system performance

Iterate on your approach, refining selection criteria and structuring as needed.
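
A bare-bones A/B comparison might look like the sketch below. Everything here is illustrative: `echo_model` stands in for a real LLM call by returning its context unchanged, `contains` is a deliberately crude grader, and the two configurations and test cases are made-up data.

```python
from statistics import mean
from typing import Callable

DOCS = {
    "hours": "Support is open 9am to 5pm.",
    "refunds": "Refunds are issued within 14 days.",
}


def config_a(question: str) -> str:
    """Configuration A: always supplies only the support-hours document."""
    return DOCS["hours"]


def config_b(question: str) -> str:
    """Configuration B: supplies both documents."""
    return DOCS["hours"] + " " + DOCS["refunds"]


def echo_model(context: str) -> str:
    """Stand-in for an LLM call: returns the context it was given."""
    return context


def contains(output: str, expected: str) -> float:
    """Crude grader: 1.0 if the expected phrase appears in the output."""
    return 1.0 if expected in output else 0.0


def compare_configs(configs: dict[str, Callable[[str], str]], cases: list[dict],
                    run_model: Callable[[str], str],
                    grade: Callable[[str, str], float]) -> dict[str, float]:
    """Return the mean score of each context configuration over the test cases."""
    return {
        name: mean(grade(run_model(build(case["question"])), case["expected"]) for case in cases)
        for name, build in configs.items()
    }


CASES = [
    {"question": "When is support open?", "expected": "9am"},
    {"question": "How fast are refunds?", "expected": "14 days"},
]
```

With a real model and grader plugged in, the same harness lets you compare retrieval depth, ordering, or summarization settings on a fixed test set.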

If you are refining your context strategy, it also helps to understand the trade-off between fine-tuning a model and relying on in-context learning.

Common Pitfalls and Challenges in Context Engineering

Overfitting and Irrelevance

Including too much information, or the wrong kind, can cause:

- Distraction: The model may focus on less important details.

- Contradiction: Conflicting data can confuse the model.

- Overfitting: Custom contexts that work for one case may not generalize.

Careful curation and ongoing validation are key to avoiding these issues.
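
As one example of such validation, the sketch below checks a set of facts for contradictions before they enter the context. It assumes facts arrive as `(key, value, updated_at)` tuples, which is a simplification chosen for illustration; the function name `resolve_facts` is hypothetical.

```python
def resolve_facts(facts: list[tuple[str, str, int]]) -> tuple[dict[str, str], list[str]]:
    """Keep the newest value per key and report keys that had conflicting values."""
    latest: dict[str, tuple[str, int]] = {}
    conflicts: set[str] = set()
    for key, value, updated_at in facts:
        if key in latest and latest[key][0] != value:
            conflicts.add(key)  # two sources disagree about this key
        if key not in latest or updated_at > latest[key][1]:
            latest[key] = (value, updated_at)
    resolved = {key: value for key, (value, _) in latest.items()}
    return resolved, sorted(conflicts)
```

The list of conflicting keys can be logged or sent for human review, so that disagreements between sources are surfaced rather than silently passed to the model.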

Computational Constraints

Building, summarizing, and formatting context—especially in real time—can be computationally intensive. Engineers must balance:

- Latency: Fast retrieval and context assembly are critical for responsive applications.

- Cost: Each LLM call, summarization, or database query can incur costs.

- Scalability: Systems must handle multiple users and sessions efficiently.

Optimizing pipelines and leveraging caching or pre-processing can help.
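
Caching is often the simplest of these optimizations. The sketch below memoizes a summarization step with Python's standard `functools.lru_cache`; the function body is a placeholder for an expensive LLM call, and the `CALLS` counter exists only to make the effect visible.

```python
from functools import lru_cache

CALLS = {"count": 0}  # counts how often the expensive step actually runs


@lru_cache(maxsize=256)
def summarize_document(doc_id: str) -> str:
    """Placeholder for an expensive summarization call, cached per document ID."""
    CALLS["count"] += 1
    return f"summary of {doc_id}"
```

Repeated requests for the same `doc_id` are served from memory. If the underlying document changes, the stale entry must be invalidated, for example by calling `summarize_document.cache_clear()` or by including a version in the cache key.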

Human-AI Collaboration Issues

Context engineering is not just a technical task. It requires deep domain knowledge and understanding of user needs. Pitfalls include:

- Misunderstanding user intent: Leading to irrelevant or incomplete context.

- Insufficient transparency: Users may not know what information the AI is using.

- Bias in selection: Human engineers’ choices can shape (or distort) model outputs.

Close collaboration between domain experts, AI engineers, and users is essential.

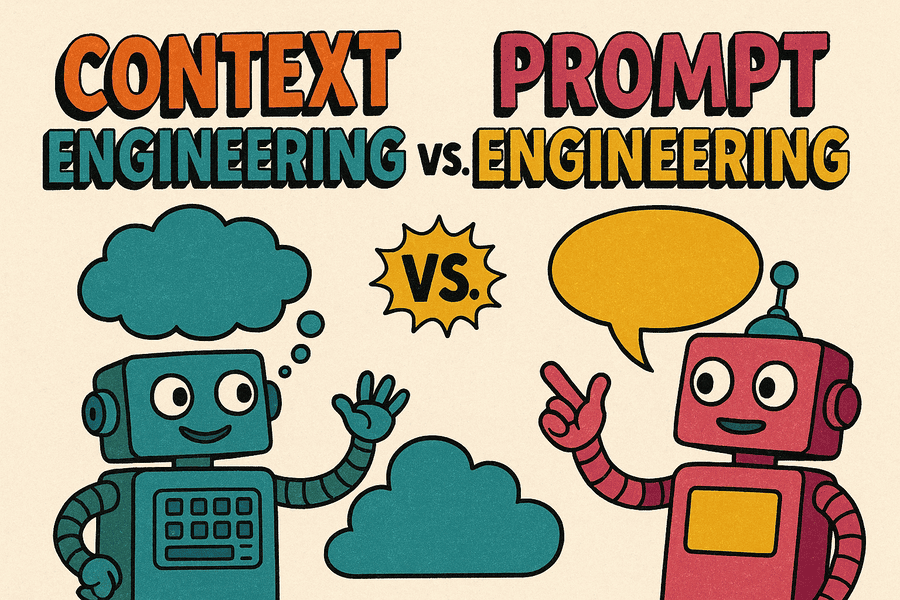

Context Engineering vs. Prompt Engineering: Key Differences

Use Cases and Examples

| Feature | Prompt Engineering | Context Engineering |
| --- | --- | --- |
| Scope | Focuses on crafting the input query or instruction | Focuses on curating and structuring the supporting information |
| Complexity | Effective for simple, self-contained tasks | Essential for multi-step, data-rich, or dynamic tasks |
| Example | “Write a poem about the ocean.” | Provide a summary of the latest marine research, user’s preferred poetic style, and relevant facts about the ocean before asking for the poem |
| Tools | Prompt templates, few-shot examples | RAG, vector databases, summarization pipelines, automated context builders |
| Limitations | Suffers when knowledge needed is not in the prompt or model weights | Can be computationally expensive and complex to manage |

When to Use Each Approach

- Prompt engineering remains ideal for rapid prototyping, simple queries, or well-defined tasks where all necessary information can be included in a single prompt.

- Context engineering is indispensable for advanced applications—enterprise AI, research assistants, customer support bots, and any scenario involving dynamic or large-scale knowledge.

Increasingly, the two approaches are complementary, but context engineering is becoming the dominant practice for serious AI deployments.

The Future of Context Engineering

Evolving LLM Capabilities

As LLMs’ context windows grow (OpenAI’s GPT-4 Turbo, Anthropic’s Claude, etc.), the potential for richer, more nuanced context engineering expands. This will enable:

- More comprehensive reasoning

- Personalized experiences at scale

- Integration with real-time data streams

However, the challenge of curating and structuring context will remain, if not grow, alongside these advances.

The Role in Autonomous Agents

Next-generation AI systems are increasingly autonomous agents—LLMs that plan, execute, and adapt to achieve complex goals. Context engineering is fundamental here:

- Memory management: Agents must remember and prioritize information over long sessions.

- Goal adaptation: Context changes as objectives shift.

- Tool use: Agents may need to fetch, process, or synthesize data from multiple sources on the fly.

Effective context engineering is what will separate powerful, reliable AI agents from unreliable or hallucination-prone ones.
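
For the memory management point, one possible sketch is a fixed-capacity store that evicts the least important note first. The importance scores are assumed to come from elsewhere (for instance, a heuristic or a model rating), and the class name `AgentMemory` is hypothetical.

```python
import heapq
from itertools import count


class AgentMemory:
    """Keeps at most `capacity` notes, discarding the least important when full."""

    def __init__(self, capacity: int) -> None:
        self.capacity = capacity
        self._heap: list[tuple[float, int, str]] = []  # min-heap keyed on importance
        self._order = count()  # tie-breaker: older notes are evicted first

    def remember(self, note: str, importance: float) -> None:
        entry = (importance, next(self._order), note)
        if len(self._heap) < self.capacity:
            heapq.heappush(self._heap, entry)
        else:
            # push the new entry, then drop whichever entry is least important
            heapq.heappushpop(self._heap, entry)

    def recall(self) -> list[str]:
        """Return notes from most to least important."""
        return [note for _, _, note in sorted(self._heap, key=lambda e: (-e[0], e[1]))]
```

The output of `recall()` can be written into the context at each step, so that the agent's limited window is spent on what matters most for the current goal.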

Getting Started with Context Engineering

Skills, Tools, and Resources

Key skills:

- Information retrieval and text processing

- Summarization techniques (extractive and abstractive)

- Prompt engineering fundamentals

- Familiarity with LLM APIs and context management

Essential tools and frameworks:

- LangChain: Modular framework for chaining context and LLM calls

- LlamaIndex: Powerful for building context-aware document retrieval systems

- Vector databases (Pinecone, FAISS, Weaviate): For semantic search and retrieval

- RAG pipelines: Retrieval-augmented generation architectures

- OpenAI, Anthropic, Cohere APIs: For LLM access and context window management

Community and Learning Paths

Where to learn:

- Phil Schmid’s blog: Leading resource on context engineering

- LangChain documentation and tutorials

- Hugging Face forums and community discussions

- OpenAI and Anthropic developer guides

- Reddit threads and AI newsletters (e.g., natesnewsletter.substack.com)

How to practice:

- Build small demo apps that use RAG or context assembly for specific tasks

- Participate in hackathons or open-source projects focused on LLMs

- Engage with the community to share context engineering patterns and learn from others’ experiences

As context engineering gains traction, it’s also critical to understand the foundational differences between LLMs and broader generative AI systems. Our article on the difference between LLMs and Generative AI offers deeper insight into how these technologies diverge—and why context matters more in some than others.

Conclusion: Why Context Engineering Is the Next Essential AI Skill

In the fast-evolving world of artificial intelligence, context engineering is emerging as the new essential skill—surpassing prompt engineering in its impact and reach. As LLMs become more capable, the bottleneck shifts from how we ask questions to how we supply and structure the information these models need to excel.

From enterprise search to autonomous agents, robust context engineering is what enables AI to deliver consistent, accurate, and contextually aware results. Whether you’re a developer, data scientist, or AI product manager, mastering context engineering will be critical to building the next generation of intelligent systems.

As you embark on your own context engineering journey, remember: the goal is not just to feed more data into your models, but to curate, compress, and structure the right information, at the right moment, in the right way. In doing so, you’ll unlock the full potential of AI—transforming it from a black box into a true collaborator.