In recent years, Large Language Models (LLMs) have revolutionized the field of natural language processing (NLP), transforming how we interact with technology and access information. These sophisticated algorithms, capable of understanding and generating human-like text, have found applications across various domains, from customer service automation to creative writing and beyond.

As organizations increasingly rely on LLMs to enhance their operations and improve user experiences, the need for a comprehensive understanding of these models becomes paramount.

This article presents a detailed comparison of the leading LLMs, exploring the major players in the market, their unique features, and their respective strengths and weaknesses. By examining the evolution of LLMs and their underlying technologies, we aim to provide readers with a clear framework for evaluating which model best suits their specific needs.

Whether you’re a developer seeking to implement an LLM in your project or a business leader looking to harness AI capabilities, this guide will equip you with the knowledge necessary to navigate the complex landscape of large language models. Join us as we delve into the intricacies of LLMs, uncovering what sets them apart and how they can be leveraged to drive innovation in an increasingly digital world.

The Basics of Large Language Models

Large language models represent a significant advancement in the field of artificial intelligence, particularly in natural language processing (NLP). To fully appreciate their capabilities and potential applications, it is essential to understand what LLMs are, how they function, and how they developed. This section provides a foundational overview of the technology, covering its definition, functionality, and evolution.

The Purpose of LLMs

At their core, LLMs are artificial intelligence systems designed to understand, generate, and manipulate human language. These models are built using deep learning techniques, particularly a neural network architecture known as the transformer. The transformer architecture enables them to process vast amounts of text data efficiently and effectively, capturing complex patterns and relationships within the language.

LLMs are trained on diverse datasets that encompass a wide range of topics, styles, and contexts. This extensive training allows them to perform various tasks, including:

- Text Generation: Creating coherent and contextually relevant text based on input prompts.

- Text Completion: Predicting and completing sentences or paragraphs.

- Translation: Converting text from one language to another with high accuracy.

- Sentiment Analysis: Identifying the emotional tone behind a piece of text.

- Question Answering: Providing accurate responses to user queries based on the information available.

The ability of LLMs to generate human-like text has made them invaluable tools for businesses, researchers, and content creators alike.

Evolution of LLMs

The journey of LLMs began with simpler statistical methods for language processing but has rapidly evolved into the sophisticated architectures we see today. Here are some key milestones in the evolution of LLMs:

- Early Days: The initial approaches to NLP relied heavily on rule-based systems and statistical models that could analyze text but struggled with context and nuance.

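- Introduction of Neural Networks: Neural approaches to language, such as recurrent networks and learned word embeddings, gained traction from the late 2000s onward and marked a turning point. These models allowed for richer representations of language but were limited in scale and struggled with long-range context.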

- The Transformer Model: In 2017, the introduction of the transformer architecture by Vaswani et al. revolutionized NLP. This model utilized self-attention mechanisms that enabled it to weigh the importance of different words in a sentence dynamically. Transformers paved the way for larger and more capable models.

- Rise of Pre-trained Models: The development of pre-training techniques allowed models like BERT (Bidirectional Encoder Representations from Transformers) to learn from vast amounts of unlabeled data before being fine-tuned for specific tasks. This approach significantly improved performance across various NLP benchmarks.

- Current State: Models such as OpenAI’s GPT series and Google’s PaLM and Gemini families are among the most capable LLMs available today. These models have billions, and in some cases hundreds of billions, of parameters and have been trained on diverse datasets, enabling them to generate remarkably human-like text.
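The self-attention mechanism introduced with the transformer can be illustrated with a minimal sketch of single-head scaled dot-product attention, softmax(QKᵀ/√d)V, in plain Python. It is a simplification under stated assumptions: the toy vectors are used directly as queries, keys, and values (real models compute them with learned projections), there is only one head, and no causal mask is applied, whereas GPT-style models prevent tokens from attending to later positions.

```python
import math

def softmax(scores):
    # Subtract the maximum before exponentiating for numerical stability.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def self_attention(queries, keys, values):
    """Scaled dot-product attention, softmax(Q K^T / sqrt(d)) V, for one head.

    Each argument is a list of vectors, one per token. No causal mask is
    applied, so every token can attend to every other token."""
    d = len(keys[0])
    outputs, attention = [], []
    for q in queries:
        # Similarity between this query and every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in keys]
        weights = softmax(scores)  # how strongly this token attends to each token
        # The output is the attention-weighted average of the value vectors.
        out = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(len(values[0]))]
        outputs.append(out)
        attention.append(weights)
    return outputs, attention

# Toy 2-dimensional token vectors; a real model derives Q, K and V
# from learned linear projections of the token representations.
tokens = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
outputs, attention = self_attention(tokens, tokens, tokens)
for row in attention:
    print([round(w, 3) for w in row])
```

Each row of weights sums to 1, which is what lets the model "weigh the importance" of every other token when building a token's new representation.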

As we continue to explore LLMs, understanding their foundational principles and historical context will provide valuable insights into their capabilities and future potential. In the following sections, we will delve deeper into the major players in this field and examine how they compare against one another.

Major Players in the LLM Landscape

The landscape of LLMs is populated by several key players, each contributing unique innovations and capabilities. Understanding these major models is crucial for anyone looking to leverage LLM technology effectively. This section delves into the leading organizations and their flagship models, highlighting their distinctive features and applications.

OpenAI

OpenAI has emerged as a frontrunner in the LLM arena, particularly with its Generative Pre-trained Transformer (GPT) series.

- GPT-3: Launched in 2020, GPT-3 boasts 175 billion parameters, making it one of the largest models available at the time. Its ability to generate coherent and contextually relevant text has made it a popular choice for various applications, including content creation, chatbots, and coding assistance.

- GPT-4: The subsequent iteration, GPT-4, further enhances capabilities with improved reasoning and contextual understanding. It supports multimodal inputs, allowing users to interact with the model through both text and images.

OpenAI’s focus on safety and ethical AI use is evident in its deployment strategies, which include robust moderation tools to prevent misuse.

Google

Google researchers introduced the transformer architecture in 2017, which laid the groundwork for many subsequent models, and the company has remained a leading force in LLM research since.

- BERT (Bidirectional Encoder Representations from Transformers): Released in 2018, BERT revolutionized how machines understand context in language by processing text bidirectionally. This model excels at tasks like question answering and sentiment analysis due to its deep contextual understanding.

- T5 (Text-to-Text Transfer Transformer): T5 adopts a unified framework where all NLP tasks are converted into a text-to-text format. This versatility allows it to handle diverse tasks such as translation and summarization effectively.

- PaLM (Pathways Language Model): With 540 billion parameters, PaLM achieved state-of-the-art results on many benchmarks when it was released in 2022. It is a dense decoder-only transformer trained with Google’s Pathways system, and it performed especially well on multi-step reasoning tasks when combined with chain-of-thought prompting.

- Gemini: Announced on December 6, 2023, Gemini is a family of multimodal large language models developed by Google DeepMind, serving as the successor to LaMDA and PaLM 2. The family includes several versions (Gemini Ultra, Gemini Pro, and Gemini Nano at launch, with Gemini Flash added later), each optimized for different use cases. Unlike traditional LLMs that primarily process text, Gemini is designed to be natively multimodal, meaning it can work with not just text but also images, audio, video, and code.
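T5’s text-to-text framing means that every task, whether translation or summarization, is expressed as an input string with a task prefix, and the model always answers with a string. The sketch below only builds such input strings; the helper name `to_text_to_text` is hypothetical, and the two prefixes follow the examples given in the T5 paper rather than a complete list of T5’s tasks.

```python
def to_text_to_text(task, text, source_lang="English", target_lang="German"):
    """Cast a task as a plain input string with a task prefix, T5-style.

    The model's answer is also plain text (for example, the German translation
    or the summary), so one model and one training objective cover every task."""
    if task == "translate":
        return f"translate {source_lang} to {target_lang}: {text}"
    if task == "summarize":
        return f"summarize: {text}"
    raise ValueError(f"unsupported task: {task!r}")

print(to_text_to_text("translate", "That is good."))
print(to_text_to_text("summarize", "The city council approved the new budget after a long debate."))
```

Because inputs and outputs share one format, adding a new task is mostly a matter of choosing a new prefix and supplying training examples.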

Meta AI

Meta AI’s contributions to the LLM landscape include the development of models like LLaMA (Large Language Model Meta AI).

- LLaMA: The original LLaMA release (February 2023) ranges from 7 billion to 65 billion parameters and was trained exclusively on publicly available datasets. Notably, LLaMA-13B outperformed GPT-3 (175 billion parameters) on most benchmarks despite being more than ten times smaller, showing that high performance can be achieved without proprietary data sources.

Anthropic

Anthropic focuses on creating LLMs that prioritize safety and alignment with human values.

- Claude: Reportedly named after Claude Shannon, this model family emphasizes ethical considerations in AI deployment. It aims to minimize harmful outputs while maintaining high performance across various language tasks.

Mistral

Mistral AI is making significant strides in the LLM landscape with its focus on efficiency and speed.

- Mistral Large: This model is designed for high-complexity tasks while maintaining fast processing. The original Mistral Large has a maximum context window of 32k tokens, allowing it to handle long documents, and it generates coherent text while scoring well on a range of industry benchmarks.

- Mistral 7B: Aimed at real-time applications requiring quick responses, Mistral 7B outperforms the larger Llama 2 13B on all benchmarks reported in its release paper, despite having only about 7 billion parameters. It uses grouped-query attention (GQA) for faster inference and sliding window attention (SWA) to handle longer sequences efficiently.

- Functionality: Mistral models are equipped with capabilities such as contextual understanding, sentiment analysis, translation, summarization, and code generation. They are particularly noted for their multilingual support across several languages including English, French, Spanish, German, and Italian.

- Customization: Mistral allows fine-tuning for specific domains or tasks such as medical or legal applications. This adaptability enhances its performance in specialized fields.
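Sliding window attention, mentioned above for Mistral 7B, restricts each token to a fixed-size window of recent tokens instead of the entire prefix. A minimal sketch of the resulting attention mask, assuming a causal setting where each token sees itself and the previous `window - 1` tokens (exact boundary conventions vary between implementations; Mistral 7B uses a window of 4,096):

```python
def sliding_window_mask(seq_len, window):
    """Boolean mask for causal sliding window attention.

    mask[i][j] is True when token i may attend to token j: j is not in the
    future (j <= i) and lies within the last `window` positions (i - j < window)."""
    return [[0 <= i - j < window for j in range(seq_len)] for i in range(seq_len)]

# Visualize: 'x' marks allowed attention, '.' marks masked-out positions.
for row in sliding_window_mask(6, 3):
    print("".join("x" if allowed else "." for allowed in row))
```

Each token attends to at most `window` positions, so attention cost grows linearly rather than quadratically with sequence length. Because layers are stacked, information can still flow further back: after k layers, a token can be influenced by tokens up to roughly k × window positions earlier.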

Comparative Analysis of Leading LLMs

As the LLM field evolves, understanding how these models stack up against each other is crucial for making informed decisions. This section provides a comparative analysis of leading LLMs, focusing on their performance metrics, suitability for various applications, and key features in an accessible manner.

Performance Metrics

When evaluating LLMs, several performance metrics help gauge their effectiveness across different tasks. Here are some of the most relevant metrics:

- Perplexity: This measures how well a model predicts the next token in a text; formally, it is the exponential of the average negative log-likelihood per token. A lower perplexity indicates better performance, meaning the model is less “confused” by the text.

- Accuracy: This straightforward metric assesses how often the model’s predictions match the correct answers. High accuracy is crucial for tasks like question answering and classification.

- F1 Score: This is the harmonic mean of precision (the proportion of retrieved results that are relevant) and recall (the proportion of relevant results that are retrieved). A high F1 score indicates that the model performs well on both.

- Human Evaluation: While automated metrics are useful, human judgment remains vital. Evaluators assess qualities like fluency, coherence, and relevance to ensure that responses sound natural and are contextually appropriate.
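Two of the automated metrics above, perplexity and F1, reduce to short formulas. The sketch below assumes you already have the natural-log probabilities a model assigned to each actual token of a held-out text, and the true positive, false positive, and false negative counts from a classification or extraction task; obtaining those from a specific model or API is outside its scope.

```python
import math

def perplexity(token_log_probs):
    """Perplexity = exp(mean negative log-likelihood per token).

    token_log_probs are natural-log probabilities the model assigned to
    each actual next token in a held-out text."""
    nll = -sum(token_log_probs) / len(token_log_probs)
    return math.exp(nll)

def f1_score(true_positives, false_positives, false_negatives):
    """F1 is the harmonic mean of precision and recall."""
    if true_positives == 0:
        return 0.0  # precision or recall is zero (or undefined), so F1 is 0
    precision = true_positives / (true_positives + false_positives)
    recall = true_positives / (true_positives + false_negatives)
    return 2 * precision * recall / (precision + recall)

# A model that gives every correct token probability 0.25 is as uncertain as a
# uniform guess among 4 options, so its perplexity is 4 (up to rounding).
print(perplexity([math.log(0.25)] * 10))
# Precision 0.8, recall 8/12: F1 = 16/22, about 0.727.
print(f1_score(true_positives=8, false_positives=2, false_negatives=4))
```

The perplexity example shows why lower is better: a perfect model that assigns probability 1 to every correct token has perplexity 1.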

Use Case Suitability

Different models excel in various applications based on their design and training data. Here’s a breakdown of some leading models and where they shine:

- OpenAI’s GPT-4:

- Best For: Creative writing, chatbots, and coding assistance.

- Strengths: Known for generating engaging and coherent text across diverse topics, making it versatile for many applications.

- Google’s Gemini:

- Best For: Multimodal tasks that require integration of text with images or other data types.

- Strengths: Gemini excels in reasoning tasks and has shown strong performance across various benchmarks, making it suitable for complex applications. Its ability to handle large documents and perform data analysis further enhances its utility.

- Meta AI’s LLaMA:

- Best For: Research projects and academic use due to its open-source nature.

- Strengths: Performs competitively on various language tasks without relying on proprietary data sources, making it accessible for researchers.

- Anthropic’s Claude:

- Best For: Applications prioritizing safety and ethical considerations.

- Strengths: Designed to minimize harmful outputs while still providing accurate responses, making it ideal for sensitive applications.

- Mistral:

- Best For: Real-time applications requiring efficiency and speed.

- Strengths: Mistral models excel in programming and mathematical tasks while maintaining high performance on popular benchmarks. The original Mistral Large model features a 32K-token context window, allowing it to handle long documents effectively.

Summary of Benchmark Performance

Published evaluations, mostly reported by the model developers for specific model versions, show how these models perform across various benchmarks:

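- MMLU (Massive Multitask Language Understanding): GPT-4o, the successor to GPT-4, has a reported score of about 88.7%. Gemini and Claude models report scores in a similar range, so small differences depend heavily on the evaluation setup (for example, 5-shot versus chain-of-thought prompting).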

- Reasoning Tasks (GPQA, a set of graduate-level science questions): Claude 3.5 Sonnet has a reported score of about 59.4%, while GPT-4o scores about 53.6%.

- Multilingual Capabilities (MGSM, grade-school math problems translated into multiple languages): Claude 3.5 Sonnet and Llama 3.1 405B both report about 91.6%, showcasing their effectiveness across languages.

- Mistral Performance: Mistral Large 2 reports an MMLU accuracy of about 84%. It performs particularly well on coding benchmarks such as HumanEval and MBPP, alongside solid reasoning results.

This comparative analysis provides a clearer understanding of how each model operates and where they excel. By considering performance metrics, use case suitability, and key features, users can make informed decisions about which LLM best meets their needs. Recognizing these distinctions is essential as we continue exploring the capabilities of these advanced technologies.

Technical Features Comparison

Understanding the technical features of leading Large Language Models (LLMs) is essential for evaluating their capabilities and suitability for various applications. This section provides a comparison of key technical aspects, including architecture, parameter count, training data, and unique functionalities.

Architecture

Most modern LLMs are built on the transformer architecture, which utilizes self-attention mechanisms to process and generate text efficiently. Here’s how the leading models compare:

- OpenAI GPT-4:

- Architecture: Transformer-based autoregressive model.

- Strengths: Excellent at generating coherent text and at handling complex, multi-step prompts.

- Google Gemini:

- Architecture: Also based on the transformer model, Gemini integrates multimodal capabilities, allowing it to process both text and visual data.

- Strengths: Designed for complex reasoning tasks and capable of handling large documents, making it suitable for a variety of applications.

- Meta AI LLaMA:

- Architecture: Decoder-only transformer with efficiency-oriented modifications such as RMSNorm pre-normalization, SwiGLU activations, and rotary positional embeddings (RoPE).

- Strengths: Offers competitive performance across various benchmarks while being open-source, allowing for community-driven improvements.

- Anthropic Claude:

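- Architecture: Transformer-based; Anthropic has not published full architectural details, and its emphasis on safety comes mainly from training methods such as Constitutional AI rather than from the architecture itself.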

- Strengths: Prioritizes minimizing harmful outputs while maintaining high accuracy in responses.

- Mistral:

- Architecture: Mistral employs a transformer architecture with efficiency enhancements such as grouped-query attention (GQA) and sliding window attention (SWA).

- Strengths: Designed for efficiency and speed, Mistral excels in real-time applications, particularly in programming and mathematical tasks. Its architecture allows it to handle long sequences effectively, making it suitable for complex queries.
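The “autoregressive” label used above for GPT-4 means the model generates text one token at a time, feeding each new token back in as input for the next step. The loop below is a minimal sketch of greedy decoding; the `TOY_MODEL` probability table and `next_token_probs` function are hypothetical stand-ins for a real network, which would condition on the entire prefix rather than only the last token and often sample instead of always picking the most likely token.

```python
# Hypothetical toy "model": next-token probabilities keyed by the previous token.
TOY_MODEL = {
    "<s>": {"large": 0.6, "the": 0.4},
    "large": {"language": 0.9, "models": 0.1},
    "language": {"models": 0.95, "</s>": 0.05},
    "models": {"</s>": 0.7, "generate": 0.3},
    "the": {"models": 1.0},
    "generate": {"text": 1.0},
    "text": {"</s>": 1.0},
}

def next_token_probs(prefix):
    # A real LLM would run the whole prefix through the network here.
    return TOY_MODEL[prefix[-1]]

def greedy_decode(max_new_tokens=10):
    tokens = ["<s>"]  # start-of-sequence marker
    for _ in range(max_new_tokens):
        probs = next_token_probs(tokens)
        best = max(probs, key=probs.get)  # greedy: choose the most likely token
        if best == "</s>":  # end-of-sequence marker stops generation
            break
        tokens.append(best)  # the new token becomes part of the next step's input
    return tokens[1:]

print(" ".join(greedy_decode()))  # large language models
```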

Parameter Count

The number of parameters in an LLM is one important factor in its performance, although many vendors no longer disclose it:

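| Model | Parameter Count |
|---|---|
| OpenAI GPT-4 | Not publicly disclosed |
| Google Gemini | Not publicly disclosed for most versions (Gemini Nano: 1.8 and 3.25 billion) |
| Meta AI LLaMA | 7 to 65 billion (original LLaMA); up to 405 billion (Llama 3.1) |
| Anthropic Claude | Not publicly disclosed |
| Mistral | About 7 billion (Mistral 7B); 123 billion (Mistral Large 2) |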

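A higher parameter count generally allows for more nuanced understanding and generation of text, though it also requires more memory and compute. Parameter count is not the whole story, however: training data and methods matter too, which is how Mistral 7B can outperform larger models on several benchmarks.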
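One concrete way to see the resource cost of more parameters is the memory needed just to store the weights: parameter count times bytes per parameter. The sketch below uses rounded parameter counts mentioned in this article and assumes common storage precisions; it deliberately ignores activations, the KV cache, optimizer state, and framework overhead, so real requirements are higher.

```python
def weight_memory_gb(num_params, bytes_per_param):
    """Rough memory needed just to store the weights, in gigabytes (10**9 bytes).

    Ignores activations, the KV cache, optimizer state and framework overhead."""
    return num_params * bytes_per_param / 1e9

# Rounded parameter counts from this article; precisions are common choices.
models = {"Mistral 7B": 7e9, "LLaMA-65B": 65e9, "Mistral Large 2": 123e9}
precisions = {"fp16/bf16": 2, "int8": 1, "4-bit": 0.5}

for name, params in models.items():
    row = ", ".join(f"{p}: ~{weight_memory_gb(params, b):.1f} GB" for p, b in precisions.items())
    print(f"{name}: {row}")
```

For example, a 7-billion-parameter model needs about 14 GB in 16-bit precision, while a 123-billion-parameter model needs about 246 GB, which is why quantization to 8 or 4 bits is common for local deployment.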

Training Data

The quality and diversity of training data play a crucial role in the effectiveness of LLMs:

- OpenAI GPT-4: Trained on a mix of publicly available data (such as internet content) and data licensed from third-party providers, giving it a broad understanding of many topics.

- Google Gemini: Utilizes multimodal datasets that include both text and images, enhancing its ability to understand context in different formats.

- Meta AI LLaMA: Focuses on publicly available data, promoting transparency and accessibility for researchers.

- Anthropic Claude: Emphasizes safe interactions; Anthropic trains Claude with techniques such as reinforcement learning from human feedback and Constitutional AI to reduce bias and harmful outputs.

- Mistral: Trained on multilingual datasets that enhance its versatility across languages. Mistral models are designed to perform well in coding tasks as well as general language understanding.

Unique Functionalities

Each model offers unique features that cater to specific needs:

- OpenAI GPT-4: Known for its creative writing capabilities and versatility in generating human-like text across diverse domains.

- Google Gemini: Its multimodal capabilities allow it to seamlessly integrate text with images or other forms of data, making it ideal for applications requiring rich context.

- Meta AI LLaMA: Being open-source allows developers to fine-tune the model to meet specific requirements, providing flexibility in deployment.

- Anthropic Claude: Built with a focus on ethical AI use, it incorporates mechanisms to reduce harmful outputs while maintaining high performance across various tasks.

- Mistral: Uses attention mechanisms such as GQA, in which groups of query heads share key and value heads; this shrinks the key/value cache and speeds up inference with little loss in quality. Mistral also supports function calling, making it suitable for business applications that connect the model to external tools.
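The efficiency gain from grouped-query attention can be estimated directly from the size of the key/value cache that a model keeps during generation. The sketch below assumes the shape reported in the Mistral 7B paper (32 layers, 32 query heads, 8 key/value heads, head dimension 128), 16-bit cache values, and a single 4,096-token sequence; it is an arithmetic illustration, not a measurement of any particular runtime.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, bytes_per_value=2):
    """Memory for cached keys and values (hence the factor 2) across all layers,
    for a single sequence of seq_len tokens."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * bytes_per_value

def kv_head_for_query_head(q_head, n_q_heads, n_kv_heads):
    """Under GQA, consecutive groups of query heads share one key/value head."""
    group_size = n_q_heads // n_kv_heads
    return q_head // group_size

# Shape reported in the Mistral 7B paper; cache values assumed to be 16-bit (2 bytes).
n_layers, n_q_heads, n_kv_heads, head_dim = 32, 32, 8, 128
seq_len = 4096

mha = kv_cache_bytes(n_layers, n_q_heads, head_dim, seq_len)  # standard multi-head: one KV head per query head
gqa = kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len)  # GQA: 4 query heads share each KV head

print(f"MHA: {mha / 2**30:.2f} GiB, GQA: {gqa / 2**30:.2f} GiB ({mha // gqa}x smaller)")
print([kv_head_for_query_head(h, n_q_heads, n_kv_heads) for h in range(8)])  # [0, 0, 0, 0, 1, 1, 1, 1]
```

With these numbers the cache drops from 2 GiB to 0.5 GiB per sequence. Less memory to read at every decoding step is where most of GQA’s speedup comes from.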

This technical features comparison highlights the strengths and unique characteristics of each leading LLM. By understanding their architectures, parameter counts, training data sources, and special functionalities, users can make informed decisions about which model best fits their specific needs and applications. As the landscape of LLMs continues to evolve, recognizing these distinctions will be essential for leveraging their full potential in real-world scenarios.

Summary Table of Leading LLMs

| Model | Performance Metrics | Use Case Suitability | Parameter Count | Training Data | Architecture | Unique Functionalities |
|---|---|---|---|---|---|---|
| OpenAI GPT-4 | High accuracy; low perplexity | Creative writing, chatbots, coding assistance | Not publicly disclosed | Publicly available and licensed data, including internet content | Transformer-based autoregressive model | Coherent, human-like text generation; versatile across domains; robust API access for integration |
| Google Gemini | Strong reasoning | Multimodal tasks requiring text and images | Not publicly disclosed for most versions | Multimodal datasets including images and text | Transformer-based with multimodal capabilities | Integrates text with images; handles complex queries; designed for complex reasoning tasks |
| Meta AI LLaMA | Competitive benchmark scores | Research projects and academic use | 7 to 65 billion (original LLaMA) | Publicly available data | Transformer-based | Open-source for customization; community-driven improvements |
| Anthropic Claude | Strong alignment with user intent | Applications prioritizing safety and ethics | Not publicly disclosed | Curated to minimize bias and harmful outputs | Transformer-based | Focus on ethical AI use; mechanisms to reduce harmful outputs |
| Mistral | Strong performance | Real-time applications, programming tasks | 123 billion (Mistral Large 2) | Multilingual datasets | Transformer-based with GQA and SWA | Advanced attention mechanisms (GQA); function calling |

This comprehensive table provides a clear overview of the leading LLMs, summarizing their performance metrics, suitability for various applications, parameter counts, training data sources, architectures, and unique functionalities. It serves as a valuable reference for users looking to compare these models effectively.

Pros and Cons of Each Model

When considering the adoption of LLMs, it’s essential to weigh their advantages against their limitations. Each model has unique strengths and weaknesses that can significantly impact their effectiveness in different applications. This section outlines the pros and cons of the leading LLMs, providing a comprehensive overview to help users make informed decisions.

OpenAI GPT-4

Pros:

- High Versatility: Excels in various tasks, including creative writing, coding assistance, and conversational agents.

- Human-like Text Generation: Produces coherent and contextually relevant text, making interactions feel natural.

- Robust API Access: Easy integration into applications through a well-documented API.

Cons:

- Costly Operations: High computational requirements lead to significant operational costs.

- Potential for Misinformation: Can generate incorrect or misleading information if not monitored.

- Lack of Transparency: The model operates as a “black box,” making it difficult to understand how it arrives at certain conclusions.

Google Gemini

Pros:

- Multimodal Capabilities: Can process and generate both text and images, enhancing its applicability in diverse scenarios.

- Strong Reasoning Skills: Performs well on complex reasoning tasks, making it suitable for analytical applications.

- Scalability: Designed to handle large datasets and documents efficiently.

Cons:

- Resource Intensive: Requires substantial computational power, which may be a barrier for smaller organizations.

- Data Privacy Concerns: Handling sensitive data necessitates robust privacy measures to protect user information.

- Bias Risks: Like other LLMs, it can perpetuate biases present in its training data.

Meta AI LLaMA

Pros:

- Open Source Accessibility: Being open-source allows for customization and community-driven improvements.

- Cost-effective for Research: Lower operational costs compared to proprietary models make it attractive for academic use.

- Competitive Performance: Delivers strong results across various language tasks.

Cons:

- Limited Commercial Support: May lack the extensive support and resources available with proprietary models.

- Dependence on Community Contributions: Updates and improvements rely on community involvement, which can vary in consistency.

- Potential for Biases: Outputs may reflect biases inherent in the training data.

Anthropic Claude

Pros:

- Focus on Safety and Ethics: Designed to minimize harmful outputs, making it suitable for sensitive applications.

- High User Intent Alignment: Strives to provide responses that align closely with user expectations and needs.

- Transparency Efforts: Works towards improving explainability in its outputs.

Cons:

- Performance Trade-offs: Emphasis on safety may lead to less creative or engaging text generation compared to other models.

- Variable Performance Across Tasks: While strong in some areas, it may not excel universally across all NLP tasks.

- Resource Requirements: Still requires significant computational resources, impacting accessibility for smaller organizations.

Summary Table of Pros and Cons

| Model | Pros | Cons |
|---|---|---|
| OpenAI GPT-4 | High versatility; human-like text generation; robust API access | Costly operations; potential for misinformation; lack of transparency |
| Google Gemini | Multimodal capabilities; strong reasoning skills; scalability | Resource intensive; data privacy concerns; bias risks |
| Meta AI LLaMA | Open source accessibility; cost-effective for research; competitive performance | Limited commercial support; dependence on community contributions; potential for biases |
| Anthropic Claude | Focus on safety and ethics; high user intent alignment; transparency efforts | Performance trade-offs; variable performance across tasks; resource requirements |

This comprehensive overview of the pros and cons of each leading LLM provides valuable insights for users considering which model best fits their specific needs. By understanding these strengths and limitations, organizations can make more informed decisions about leveraging LLMs effectively.

Future Trends in LLM Development

LLM technology is advancing quickly, and with growing adoption across industries, several key trends are emerging that will shape how these models are developed and applied in the coming years. This section explores these trends and predictions for the future of LLMs.

- Fine-tuning for Specific Domains – One of the most notable trends is the increasing specialization of LLMs for specific industries such as healthcare, finance, and law. By fine-tuning models with domain-specific data, these LLMs can better understand industry jargon and perform tasks relevant to those fields, enhancing their effectiveness and accuracy.

- Enhanced Code Generation – LLMs are becoming invaluable tools for developers, assisting with code generation, autocompletion, and debugging. Future models are expected to offer even more sophisticated coding capabilities, streamlining the software development process and reducing repetitive tasks.

- Natural Language Programming Interfaces – The development of natural language programming interfaces will allow developers to express their ideas in plain English, which LLMs can then translate into code. This trend aims to democratize programming by making it more accessible to non-technical users.

- Multimodal Learning – Future LLMs will increasingly integrate various modalities—such as text, images, audio, and video—enabling them to understand and generate complex information across different formats. This capability will enhance their applications in fields like content creation, education, and interactive media.

- Explainable AI – As transparency becomes more critical in AI development, future LLMs will focus on explainability. Models will be designed to articulate their reasoning processes, helping build trust with users and allowing developers to understand how decisions are made.

- Democratization of Programming – LLMs are expected to lower barriers to entry in programming by providing intelligent assistance tailored to beginners. This trend could lead to a surge in new developers entering the field as LLMs simplify complex coding tasks.

- Collaborative Programming – The future may see LLMs acting as collaborative partners for teams of developers, streamlining communication and facilitating code reviews. This collaborative approach could enhance productivity and foster innovation within development teams.

- Real-Time Fact-Checking – Future LLMs will likely incorporate real-time data integration for fact-checking capabilities. This advancement would enable models to provide up-to-date information rather than relying solely on pre-trained datasets, enhancing their reliability.

- Synthetic Training Data – Research is underway to develop LLMs that can generate their own synthetic training data sets, allowing for continuous improvement without extensive human intervention. This capability could streamline the training process and enhance model performance.

- Sparse Expert Models – Sparse expert (mixture-of-experts) models activate only the parts of their neural networks that are relevant to each input token, rather than the entire network. This approach can improve efficiency and reduce computational costs while maintaining performance.
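The routing step at the heart of sparse expert models can be sketched in a few lines. This is a simplified illustration, assuming one common variant (top-k selection followed by a softmax over the selected experts’ router scores); the scalar “experts” and the router logits are hypothetical placeholders for the feed-forward networks and learned router of a real model.

```python
import math

def softmax(xs):
    m = max(xs)  # subtract the maximum for numerical stability
    exps = [math.exp(x - m) for x in xs]
    s = sum(exps)
    return [e / s for e in exps]

def route_top_k(router_logits, k=2):
    """Pick the k highest-scoring experts and renormalize their weights.

    Only the selected experts run for this token; skipping the rest is
    where the compute savings come from."""
    top = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in top])
    return list(zip(top, weights))

def moe_layer(x, experts, router_logits, k=2):
    # Weighted sum of the outputs of only the selected experts.
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))

# Hypothetical experts: scalar functions standing in for feed-forward networks.
experts = [lambda x: x + 1, lambda x: 2 * x, lambda x: x ** 2, lambda x: -x]
logits = [0.1, 2.0, 1.5, -1.0]  # in a real model these come from a learned router
print(route_top_k(logits))       # experts 1 and 2 are selected
print(moe_layer(3.0, experts, logits))
```

Here only two of the four experts are evaluated for the input, so the total parameter count can grow with the number of experts while the compute per token stays roughly constant.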

The future of LLM development is bright, characterized by advancements that promise to enhance their capabilities and broaden their applications across various domains. As organizations increasingly adopt these models, responsible development practices will be essential to ensure ethical deployment and mitigate potential biases. With ongoing research and innovation, LLMs are set to revolutionize how we interact with technology and each other in profound ways.

Embrace the Future of AI with LLMs

The landscape of Large Language Models (LLMs) is rapidly evolving, bringing forth transformative capabilities that enhance how we interact with technology and each other. From creative writing and coding assistance to complex reasoning and multimodal understanding, LLMs like OpenAI’s GPT-4, Google’s Gemini, Meta AI’s LLaMA, Anthropic’s Claude, and Mistral are redefining possibilities across various industries.

As we look to the future, trends such as fine-tuning for specific domains, enhanced code generation, and the integration of multimodal learning will further expand the applications of LLMs. These advancements promise to make AI more accessible and effective, allowing users to harness its power in innovative ways.

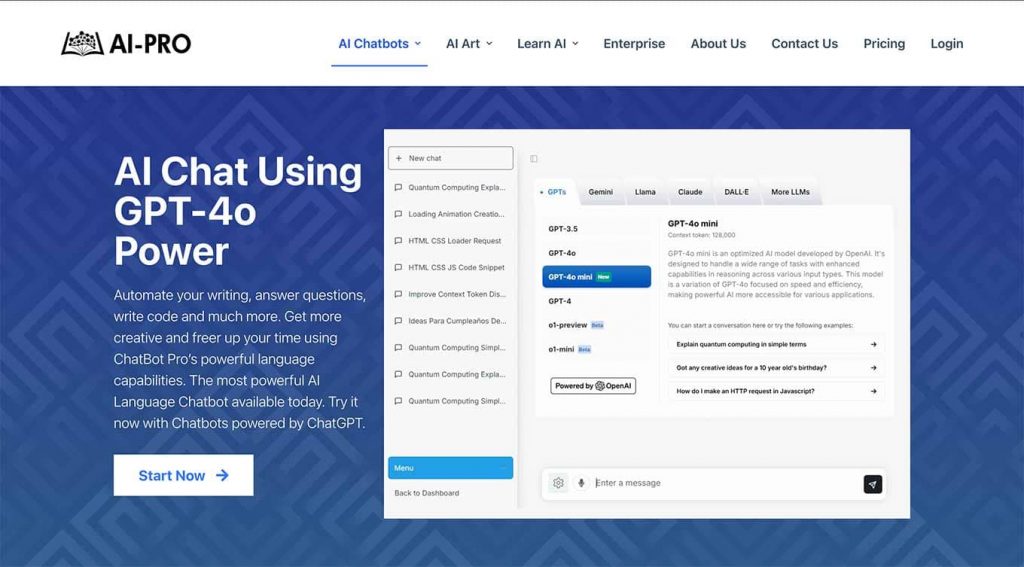

To experience the capabilities of these advanced models firsthand, we encourage you to explore AI-Pro’s ChatBot Pro. This innovative platform allows you to interact seamlessly with multiple LLMs, including GPT-4, Claude, Gemini, and more—all within a single app. Whether you’re looking to generate creative content, obtain detailed information, or engage in casual conversation, ChatBot Pro provides a versatile and user-friendly interface.

Don’t miss out on the opportunity to elevate your interactions with AI. Try ChatBot Pro today and discover how these cutting-edge models can enhance your productivity and creativity!